Chapter 15

Auditory image fundamentals

15.1 Introduction

In the previous chapters, we established a theoretical and quantitative analytical basis for temporal imaging in the auditory system, along with a firm basis for an auditory-relevant notion of coherence. The original temporal imaging theory applies directly to individual samples or pulses, but we qualitatively extended it to include nonuniform sampling, in order to make better use of the modulation transfer functions that can be applied over longer durations than single samples. It highlighted the distinction between coherent and incoherent sound, which can be traced to the inherent defocus of the auditory system (and its preferential phase locking), and is supported by empirical data about the temporal modulation transfer functions. This distinction indicates that there is an increased sensitivity to high frequencies that modulate coherent carriers in comparison to incoherent carriers.

There appears to be a built-in system that may be more optimal for coherent than for incoherent sound detection, insofar as the within-channel imaging is analyzed. This is so because of the relatively broader bandwidth of the coherent modulation transfer function (MTF), which is completely flat in the effective passband, that is, at modulation frequencies that do not get resolved in adjacent filters. In contrast, incoherent carriers carry random modulation noise at low frequencies and tend to have poorer sensitivity, unless information from several channels is pooled together. These observations are not trivially integrated when it comes to the design of the complete auditory imaging system. An analogy to spatial imaging in vision is also not helpful, as vision is strictly incoherent. In fact, incoherent-illumination imaging normally produces superior images to coherent illumination, which produces images that are much more sensitive to diffraction effects and speckle noise (e.g., dust particles on the objects or on the system elements that become visible in coherent illumination). Additionally, incoherent imaging is strictly intensity-based (i.e., linear in intensity), whereas coherent imaging is amplitude-based (linear in amplitude), although its final product is usually an intensity image as well. Direct comparison between visual and auditory imaging can only be made based on intensity images, but because of the longstanding confusion about the role of phase in hearing (§6.4.2), it is not immediately clear that amplitude imaging does not have a role in hearing and that the comparison is valid.

Many natural sound sources are coherent, but the acoustic environment and medium tend to decohere their radiated sound through reflections, dispersion, and absorption (§3.4). Other sounds of interest are generated incoherently at the source, but their received coherence depends on the bandwidth of the sound and the filters that analyze it. Therefore, we would like to formulate the action of auditory imaging, so that it can differentially respond to arbitrary levels of signal coherence. The effects of complete coherence or incoherence are well known (mainly for stationary signals), but much of the intuition in them may be lost because they are usually not framed as part of an auditory-relevant coherence theory. This means that for the majority of signals that are neither coherent nor incoherent there are no presently available heuristics that can be used to analyze them. We would like to provide some conceptual tools that bridge this gap in intuitive understanding of realistic acoustic object imaging.

This chapter follows a broad arc that encompasses several key topics in auditory imaging. It begins with discussions about sharp and blurry auditory images, which enables us to make sense out of the substantial defocus that is built into the auditory system. Suprathreshold imaging is then discussed, based on an extrapolation of threshold-level masking responses. The notion of polychromatic images is applied to hearing by way of analogy with a number of known phenomena that are reframed appropriately to support it. The special case of acoustic objects that elicit pitch is briefly reviewed and is also reframed with imaging in mind. Then, several sections deal with various polychromatic and monochromatic aberrations, as well as an interpretation of the depth of focus of the system. Finally, we provide a few rules of thumb that aid the intuition of how images are produced in the system.

15.2 Sharpness and blur in the hearing literature

Sharpness and blur are central concepts when discussing the optics of the eye (e.g., Le Grand and El Hage, 1980; Packer and Williams, 2003, pp. 52–61) and imaging systems in general. If a visual system does not produce sufficiently sharp images due to blur, it can cause various levels of disability if not corrected. Therefore, identifying the auditory analogs of sharpness and blur—should they exist—may be a powerful stepping stone in understanding how the ear works and where things can go wrong.

Currently, there is no analogous concept in psychoacoustics for sharpness that resembles the optical one and references to it in the auditory literature are scarce. Sharpness was introduced in psychoacoustics as a consistently large factor of timbre (von Bismarck, 1974). Ranging on a subjective scale between dull and sharp, sharpness was modeled using the first moment of the sum of the loudness function in all critical bands, where the high frequency content above 16 Bark (3.4 kHz) affected the rated sharpness of the stimuli tested—typically, noise and complex tones (Fastl and Zwicker, 2007; pp. 239–243). These conceptualizations of psychoacoustic sharpness appear to be irrelevant to the present discussion.
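The first-moment model mentioned above can be illustrated with a minimal sketch. It assumes a discrete set of critical bands and a purely illustrative weighting that is flat up to 16 Bark and rises linearly above it. The function name, the weighting shape, and the two test patterns are hypothetical and are not taken from von Bismarck (1974) or Fastl and Zwicker (2007), and no calibration constant is applied.

```python
import numpy as np

def weighted_first_moment(loudness_per_band, band_centers_bark):
    """Weighted first moment of a loudness pattern over critical-band rate.

    The weighting g(z) is an illustrative assumption: unity up to 16 Bark,
    then a linear rise to 4 at 24 Bark. It is not a calibrated model.
    """
    n = np.asarray(loudness_per_band, dtype=float)
    z = np.asarray(band_centers_bark, dtype=float)
    g = np.where(z <= 16.0, 1.0, 1.0 + 3.0 * (z - 16.0) / 8.0)
    return float(np.sum(n * g * z) / np.sum(n))

# Hypothetical loudness patterns on 24 band centers (0.5, 1.5, ..., 23.5 Bark)
z = np.arange(0.5, 24.0, 1.0)
dull = np.exp(-0.5 * ((z - 6.0) / 2.0) ** 2)     # energy around 6 Bark
bright = np.exp(-0.5 * ((z - 20.0) / 2.0) ** 2)  # energy around 20 Bark

print("dull pattern:  ", weighted_first_moment(dull, z))
print("bright pattern:", weighted_first_moment(bright, z))
```

In this sketch, the pattern concentrated above 16 Bark yields the larger moment, which corresponds to a rating closer to the sharp end of the dull-sharp scale.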

The antonymous notion of sharpness, blur, is more frequently encountered in the hearing literature, and is closer to how it is used in imaging. It is perhaps the association of convolution with blurring operations that makes this term somewhat more commonplace in hearing research (e.g., Stockham et al., 1975). For example, blur is occasionally invoked in the context of modulation transfer function fidelity that is impacted by room acoustics (Houtgast and Steeneken, 1985), or through manipulation of the speech envelope through modulation filtering (Drullman et al., 1994a; Drullman et al., 1994b). Similarly, in bird vocalizations, the in-situ degradation of envelope patterns over time and distance was quantified and referred to as blur (Dabelsteen et al., 1993). Another typical usage was exemplified by Simmons et al. (1996), who referred to the blurring effects of the long integration window on the perception of minute features in the echoes perceived by bats over durations shorter than 0.5 ms. Similar references to temporal or spectral blur occasionally appear in the hearing literature. For example, Carney (2018) raised the question of whether there is an auditory analog to the visual accommodation system that reacts and corrects blur to achieve (attentional) focus.

A single study tested the focus of sound sources directly. A subjective rating of the perceived source focus of anechoic speech superposed either with a specular or with a diffuse reflection was obtained in Visentin et al. (2020). Subjects were instructed that focus “should be considered as the distinction between a ‘clear’ or ‘well-defined’ sound source and a ‘blurred’ sound image.” Two clear patterns were observed. First, when the angle of diffuse reflection was increased (from \(34^\circ\) to \(79^\circ\)), the rated focus dropped to the point that it became equal to that of the specular reflection. Likely, at large angles the reflection was coherent-like and interfered with the source definition. Second, the rated focus was correlated with the interaural time difference (ITD) averaged over the 500, 1000, and 2000 Hz octave bands, and it was rated highest when the ITD was about 0. As was argued in §8.5, the ITD directly quantifies spatial coherence. So maximum coherence correlated with the maximum perceived focus, as long as the source direction is unambiguous. Incidentally, the focus highly correlated (\(r=0.84\)) with speech intelligibility, which was also highly correlated with rated loudness.

Clarity is an altogether different concept, which is probably associated with sharpness to some extent, and is more common in different audio-quality and audiometric evaluations. It was designated as a fundamental component of hearing-aid performance that is hampered by noise and distortion (Katz et al., 2015; p. 61), two factors that affect the imaging quality independently (Blackledge, 1989; pp. 8–9). In room acoustics, clarity (\(C_{80}\)) is often used to estimate the power of the earliest portion of the room impulse response in which single reflections are still relatively prominent, in comparison with the late portion (after 80 ms) (Kuttruff, 2017; pp. 169–170). This quantity has been used to estimate the sound transparency in concert hall acoustics. Clarity is also used more informally in audio quality evaluations (Bech and Zacharov, 2006; Toole, 2009), and was defined by Bech and Zacharov (2006) as: “Clarity—This attribute describes if the sound sample appears clear or muffled, for example, if the sound source is perceived as covered by something.” Muffled sounds often suggest high frequency content roll-off due to absorption, which is perhaps the opposite quality to von Bismarck's sharpness. High modulation frequency roll-off is also a common feature of blur in spatial imaging, as the removal of high spatial frequencies causes the blur of sharp edges.
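As a minimal sketch of the room-acoustic clarity index, the early-to-late energy ratio can be computed from a sampled impulse response by splitting it at 80 ms and expressing the ratio in decibels. The function names, the synthetic exponentially decaying response, and the reverberation times below are hypothetical choices for illustration only. A measured impulse response would also require onset detection and band filtering, which are omitted here.

```python
import numpy as np

def clarity_c80(impulse_response, fs, split_ms=80.0):
    """Early-to-late energy ratio in dB, with the split at 80 ms by default."""
    h2 = np.asarray(impulse_response, dtype=float) ** 2
    split = int(round(split_ms * 1e-3 * fs))
    early = np.sum(h2[:split])   # energy before the split point
    late = np.sum(h2[split:])    # energy from the split point onward
    return 10.0 * np.log10(early / late)

def synthetic_decay(rt60, fs, duration=3.0):
    """Hypothetical impulse-response envelope whose energy drops 60 dB in rt60 seconds."""
    t = np.arange(int(duration * fs)) / fs
    return 10.0 ** (-3.0 * t / rt60)

fs = 8000
for rt60 in (0.5, 1.0, 2.0):
    print(f"RT60 = {rt60} s, C80 = {clarity_c80(synthetic_decay(rt60, fs), fs):.2f} dB")
```

In this idealized decay, a shorter reverberation time places more of the energy before 80 ms and therefore gives a higher clarity value.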

15.3 Sharpness, blur, and aberrations of auditory images

In vision, sharpness characterizes static images and can be extended to moving images without much difficulty. In hearing, even static images (with constant temporal envelopes, such as pure tones) unfold over time, which is physically, perceptually, and conceptually unlike images of still visual objects, despite the mathematical parallels garnered by the space-time duality. Although we now have the imaging transform of a single pulse or sample, the short duration of the aperture does not truly allow for any appreciation of the image sharpness. Therefore, it is only through the concatenation of samples over time that auditory sharpness can be sensibly established. Still, it is much simpler to analyze the conditions for the loss of sharpness (the creation of blur) than those that give rise to sharpness. If the sources of auditory blur are negligible or altogether absent, relative sharpness can be argued for and established. In other words, we can define auditory sharpness by negation: The auditory image is sharp when different sources of image blur are either negligible or imperceptible. Therefore, the remainder of this chapter is dedicated to elucidating the different forms of blur and related aberrations that can be found in human hearing.

15.3.1 The two limits of optical blur

In both spatial and temporal imaging, the information about blur is fully contained in the impulse response function (or the point spread function of the two spatial dimensions), which relates a point in the object plane to a region in the image plane. In two dimensions, the effect of blur is to transform a point into a disc. As we saw in §13, the point spread function is fully determined by the pupil function of the imaging system and, specifically, it depends on the neural group-delay dispersion (analogous to the distance between the lens and the screen in spatial optics).

There are two limits that characterize the possible blur in the image. When the aperture is large compared to the light wavelength, the image is susceptible to geometrical blur. It can be explained by considering the different paths that exist from a point of the object to the image, which do not all meet in one mathematical point. Thus, in geometrical blur, multiple non-overlapping copies of the image are overlaid in the image plane. Consequently, the fine details and sharp edges of the object are smeared and the imaged point appears blurry. Geometrical blur can be further specified according to the exact transformation that causes an object point to assume a distorted shape on the image plane. These distortions are called aberrations and among them, defocus is the simplest one that causes blur.

In the other extreme, when the aperture size is comparable to or smaller than the wavelength, the image becomes susceptible to effects of diffraction. A point then turns into a disc with oscillating bands of light and shadow (fringes), which makes fine details less well-defined, and hence, blurry.

The aperture size for an ideal imaging system should be designed to produce blur between these two limits. An image that does not have any aberrations is referred to as diffraction-limited.

15.3.2 Contrast and blur

It is worthwhile to dwell on the two image fidelity characteristics that often appear together—contrast and blur—and elucidate their differences. Contrast quantifies the differences in intensity between the brightest and darkest points in the image, or a part thereof. Hence, it is a measure of the dynamic range of the image, which ideally maps the dynamic range of the object, so that intensity information is not lost in the imaging process. As the image is made of spatial modulations, contrast is quantified with visibility (Eq. §7.1), which we also referred to as modulation depth (§6.4.1).
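A short sketch may make the contrast measure concrete. Eq. §7.1 is not reproduced in this section, so the code assumes the standard visibility form, (max − min)/(max + min), applied to a sampled intensity envelope. The function name and the test envelope are hypothetical.

```python
import numpy as np

def visibility(intensity_envelope):
    """Visibility (modulation depth) of a non-negative intensity envelope."""
    e = np.asarray(intensity_envelope, dtype=float)
    return float((e.max() - e.min()) / (e.max() + e.min()))

# Hypothetical envelope: 4 Hz sinusoidal modulation with depth 0.6
t = np.linspace(0.0, 1.0, 1000, endpoint=False)
envelope = 1.0 + 0.6 * np.cos(2.0 * np.pi * 4.0 * t)

print("visibility of the envelope:      ", visibility(envelope))
print("visibility after a gain of three:", visibility(3.0 * envelope))
```

Because the measure is a ratio, a uniform gain leaves it unchanged, which is why contrast can be treated separately from the overall level of the image.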

Blur refers to the transformation that the image undergoes that makes it different from a simple scaling transformation of the object. The effects of geometrical and diffractive blurs are not the same here, though. In geometrical blur, the envelope broadens, as spectral components share energy with neighboring components and overall distort the image, while retaining its general shape. In diffractive blur, new spectral components can emerge in the envelope that are not part of the original object, but appear due to wave interaction with small features in the system or object that have similar dimensions to the wavelength of the light carrier. In both cases, both the envelope and its spectrum change due to blur.

While blur and contrast can, and often do, interact, they represent two different dimensions of the imaging system and we will occasionally emphasize one and not the other. Contrast does not interact directly with the spectral content of the image, whereas blur does. Note that when the envelope spectrum is imaged, it is scaled with magnification, which is a transformation that is integral to the imaging operation and does not entail blur or contrast effects.

15.3.3 Auditory blur and aberrations

The temporal auditory image blur can be understood in analogous terms to spatial blur by substituting the aperture size with aperture time and diffraction with dispersion. Thus, the temporal image can be geometrically blurred if the aperture time is much longer than the period of the sound. If it has no aberrations, then it can be considered to be dispersion-limited. A very short aperture with respect to the period may produce audible dispersion effects, at least when fine sound details are considered. Thus, a good temporal imaging design should strike a balance between geometrical blur and blur from dispersion. In fact, what we saw earlier is that the system is set far from this optimum, since it is significantly skewed toward a geometrical blur, which is seen in the significant defocus we obtained—an aberration (§12.3, §12.5, and §13.2.2).

However, things get more complicated with sound in ways that do not have analogs in vision. The most significant difference, as was repeatedly implied throughout the text, is that unlike visual imaging, which is completely incoherent, sound is partially coherent, and some of the most important sounds to humans have strong coherent components in them. As was shown in §13.4 and will be discussed in §15.5, the defocus blurs incoherent objects more than it does coherent ones. Thus, it can be used to differentiate types of coherence by design, instead of achieving nominally uniform blur across arbitrary degrees of input coherence.

The second complication in sound is due to the nonuniform temporal sampling by the auditory nerve that replaces the fixed (yet still nonuniform) spatial sampling in the retina. The loss of high modulation frequency information from the image because of insufficient (slow) sampling rate (and possibly other factors) can be a source of blur as well, which is neither dispersive nor geometrical per se. This effect will not be considered here beyond the earlier discussion about its effects on the modulation transfer function in §14.8.

Another nonstandard form of geometrical blur may occur if the sampling rate and the aperture time are mismatched, so that consecutive samples overlap (i.e., the duty cycle is larger than 100%). Effectively, this kind of blur is produced outside of the auditory system in the environment through reverberation, where multiple reflections of the object are superimposed in an irregular manner that decoheres the signal (§3.4.4).

A combined form of geometrical and dispersive blur is caused by chromatic aberration—when the monochromatic images from the different channels are not exactly overlapping or synchronized, which gives rise to the blurring of onsets and other details. Virtually all polychromatic (broadband) optical systems have some degree of chromatic aberration and the possibility of encountering it in hearing will be explored in §15.10.

Image blur may be caused by other aberrations, as a result of the imperfect quadratic curvature of the time lens, when its phase function contains higher-order terms in its Taylor expansion (see Eq. §10.27). Similarly, phase distortion may be a problem in the group dispersive segments of the cochlea or the neural pathways, if their Taylor expansion around the carrier (Eq. §10.6) has higher-order terms (Bennett and Kolner, 2001). While this large family of aberrations is very well-studied in optics and vision, the existence of their temporal analogs in hearing is difficult to identify at the present state of knowledge. They will be explored in §15.9.

In general, blurring effects can also be produced externally to the temporal imaging system. Outside of the system, it can accrue over large propagation distances, and likely occurs in turbulent atmospheric conditions (§3.4.2). Phase distortion can also be the result of individual reflections from surfaces (§3.4.3). As was reviewed in §11.2, the plane-wave approximation gradually breaks down in the ear canal above 4 kHz, which means that modulation information may be carried by different modes with different group velocities. This situation causes a so-called dispersion distortion in optics (§10.4), and may theoretically cause distortion and blur also in hearing at high frequencies.

15.4 Suprathreshold masking, contrast, and blur

Few auditory phenomena have received more attention in psychoacoustic research than masking. Beyond the curious nature of its effects, interest has stemmed from the usefulness of masking in indirectly estimating many hearing parameters and thereby inferring various aspects regarding the auditory system signal processing. Additionally, it has been implied that masking effects can be generalized to everyday hearing and can significantly impact its outcomes—especially with hearing impairments.

The definition of masking normally refers to an increase in the threshold of a stimulus in the presence of another sound (Oxenham, 2014). However, the change in threshold can be caused by more than one process and several peripheral and neural mechanisms have been considered in the literature in different contexts (Oxenham, 2001; Moore, 2013). But since the discussion of masking is strictly framed around the change of threshold, it leaves out a no less important discussion about how audible, or suprathreshold, signals sound in the presence of masking. Put differently, a complete knowledge of the masking threshold does not necessarily mean that the suprathreshold signal combined with the masker is going to sound identical to a lower-level version of the original signal in the absence of masking.

We can think of four general classes of interactions between masker and signal, or even more generally, between any two acoustic objects.

The first class of masking relates to masking that only causes the signal to sound less intense, while it is otherwise unchanged when it is presented above its masking threshold. This effect can be analogized to the apparent dimming of one object in the presence of another (e.g., viewing a remote star in broad daylight). In a perfectly linear system, amplification of the dim target leads to its perfect recovery with no distortion or loss of information. When the system exhibits (nonlinear) dynamic range compression, perfect recovery of the envelope requires variable amplification (i.e., expansion) and may be impossible to realize in practice. When the sound in question is modulated, this is akin to loss of contrast—the difference between the envelope maximum and minimum. Loss of contrast can also happen if only part of the modulated sound is masked, whereas the rest is above threshold. Or, it can take place if the loudest parts of the sound saturate and do not allow for a linear mapping of intensity. In any case, this type of masking is strictly incoherent, as the signal and masker only interact by virtue of intensity superposition.
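As a worked illustration of this loss of contrast, consider a sinusoidally modulated signal of mean intensity \(I_s\) and modulation depth \(m\), to which a stationary masker of constant intensity \(I_m\) is added by intensity superposition. The symbols \(I_s\), \(I_m\), \(f_m\), and \(m'\) are introduced only for this sketch, and the stationary masker is a simplifying assumption rather than a description of any particular experiment:

\[ I(t) = I_s\left[1 + m\cos(2\pi f_m t)\right] + I_m \]

\[ m' = \frac{I_{\max} - I_{\min}}{I_{\max} + I_{\min}} = \frac{2 m I_s}{2\left(I_s + I_m\right)} = \frac{m}{1 + I_m/I_s} \]

Under these assumptions, a masker whose intensity equals that of the signal halves the modulation depth, and a uniform gain applied to the mixture leaves \(m'\) unchanged, because it scales \(I_{\max}\) and \(I_{\min}\) equally.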

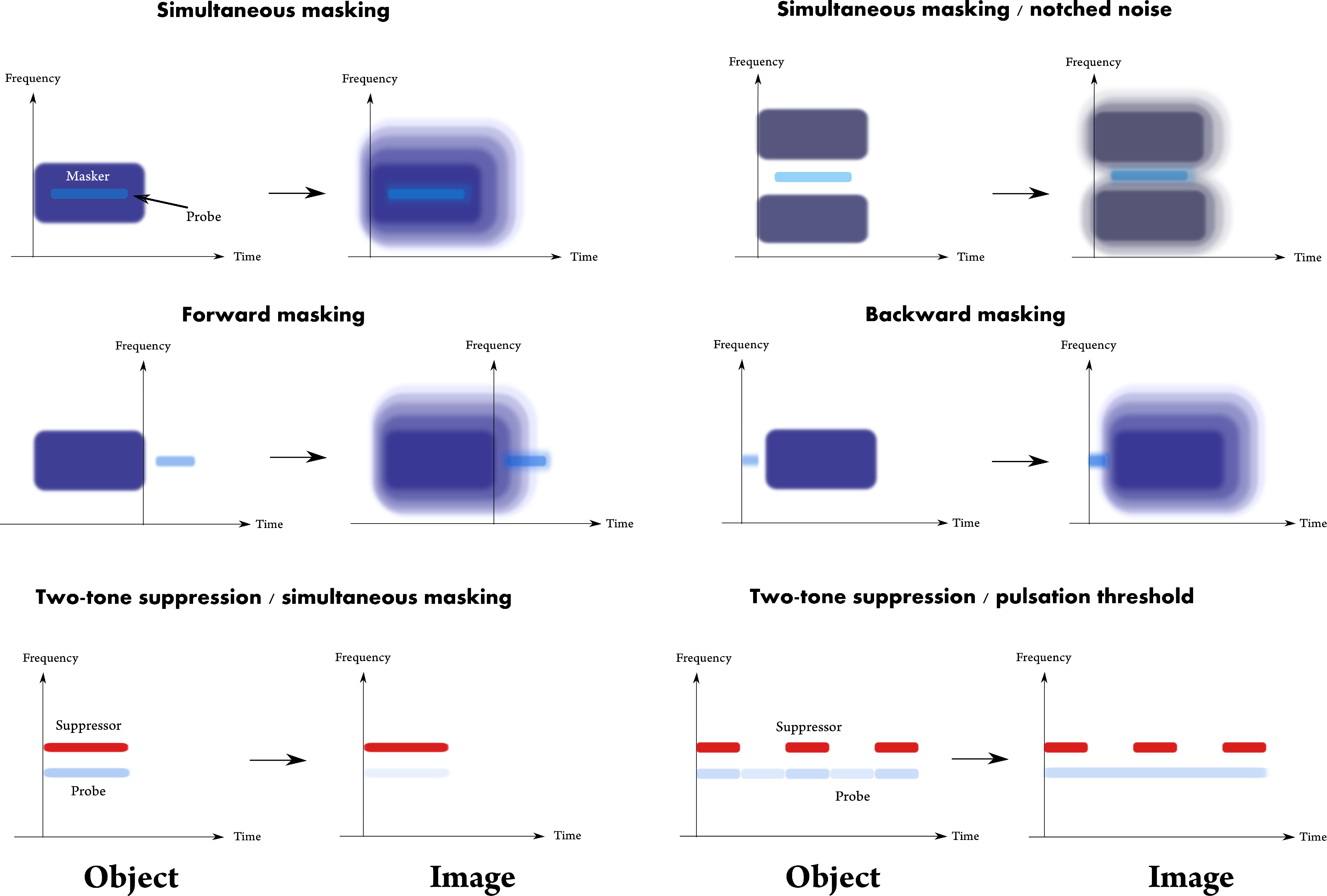

In the second class of masking, the suprathreshold signal interacts with the masker and its fine details change as a result, even if they are still recognizable as the target sound. This can obviously happen only if the two sounds interfere, which is possible when they are mutually coherent or partially coherent within the aperture stop of the relevant channel(s). As this phenomenon involves interference, the corresponding optical analogy here is diffraction blur. Note that this analogy does not specify the conditions for an interference-like response, which is usually referred to as suppression in hearing. Suppression is thought to involve nonlinearities, which extend beyond the normal bandwidth of the auditory channel. In this case, the response may not be obviously interpreted as interference, since it can also be caused by inhibition in the central pathways, which may produce a similar perceptual effect, but have a different underlying mechanism. In his seminal paper on two-tone suppression masking, Houtgast (1972) compared it to Mach bands in vision, which appear as a change in contrast around the object edge that is caused not by interference, but rather by inhibition. And yet, it is now known that suppression is cochlear in origin and has indeed been shown to be caused by the nonlinear cochlear dispersion (Charaziak et al., 2020), as we should expect from the space-time analogy between diffraction (interference) and dispersion. Unlike the loss of contrast, the resultant image here does not necessarily involve loss of information, only that some information may be difficult to recover after interference.

The third class of masking involves “phantom” sounds, whose response persists in the system even after the acoustic masker has terminated. This nonsimultaneous (forward or backward) masking is measurable in the auditory pathways and is not exclusively a result of cochlear processing that “recovers” from the masker (e.g., when the compression is being released, or after adaptation is reset due to replenishment of the synapses with vesicles; Spassova et al., 2004). Nonsimultaneous suppression effects appear to be much shorter-lived than the decay time of forward masking (Arthur et al., 1971), so that even if suppression is measurable over a short duration after the masker offset, it is unlikely to be in effect much later. However, short-term forward entrainment effects in the envelope domain have been sometimes demonstrated in cortical measurements (Saberi and Hickok, 2021). This means that suprathreshold sounds playing during the perceived masker decay may be comparable to those under weak (decayed) masking of the first class of incoherent sounds, since the sounds do not directly interact and may only result in loss of contrast. Although physically and perceptually it is nothing of the sort, the forward masking decay effectively produces a similar interaction effect that would be experienced in sound reverberation. The reverberation decay is incoherent (§8.4.2) and itself produces an effect that resembles geometrical blur (§15.3.3). However, since the effect is internal to the auditory system, perhaps the adjective “fuzzy” might describe the masking objects better than blurry.

A fourth class of maskers does not belong to any of the above, which are referred to as energetic masking effects. Informational masking has been a notable effect, where sounds are masked in a way that cannot be explained using energetic considerations only. It appears to have a central origin that is physiologically measurable as late as the inferior colliculus (Dolležal et al., 2020). The analogous effect here is of deletion: elements from the original objects do not make it to the image and are effectively eliminated from it. The types of maskers and stimuli involved in these experiments are usually tonal: multiple short tone bursts scattered in frequency (Kidd Jr et al., 2008). Each tone burst that is simultaneously played with such a masker may be comparable to a type of coherent noise that is called speckle noise, that is, distinct points that appear on the image because of dust on the imaging elements, for example, but do not belong to the object of interest. Speckle noise can be effectively removed through incoherent or partially coherent imaging, which averages light that arrives from random directions so that small details like dust do not get imaged (for example, compare the coherent and incoherent images in Figures §8.3 and §9.6). In hearing, the removal of details like tones in informational masking tests may stem from dominant incoherent imaging. If this is so, then suprathreshold sounds under informational masking may suffer some geometrical blur. Interestingly, not all listeners exhibit informational masking, so some studies preselect their subjects accordingly (Neff et al., 1993). Note that the time scales involved here may be longer than those that relate to a single pulse-image that is coherent or incoherent, which may require integration over longer time scales. Nevertheless, the logic for all types of images should be the same.

In realistic acoustic environments, we should in all likelihood expect to continuously encounter all types of masking in different amounts, but using much more complex stimuli. If the analogies above have any merit, then they entail that masking does not only dictate the instantaneous thresholds of different sound components in the image, but it also determines their suprathreshold contrast (available dynamic range) and relative blur. It may strike the reader as sleight of hand to be appropriating this host of well-established masking effects into the domain of imaging. However, it should be underlined that the emphasis is on signals above the masking threshold, which are what is being perceived, not at and below the thresholds which usually receive much of the attention in research. This intermingling of imaging and masking terminologies will enable us to make occasional use of the vast trove of masking literature that has been accumulating over a century of investigations.

15.5 The auditory defocus

The inherent auditory defocus may have been the most unexpected feature that was uncovered in this work, since one of its original goals was to show how normal hearing achieves focus, in close analogy to vision. But the unmistakable presence of a substantial defocus term is a divergence from vision theory. Interpreting its meaning requires further input from both Fourier optics and coherence theories.

Valuable insight may be gathered from two optical systems, where defocus is employed intentionally, either to blur unwanted objects, or to achieve good focus with an arbitrary depth of field. The first case, which was already presented in §1.5.1 and Figure §1.2, is the most common form of illumination in microscopy (Köhler illumination), where a small specimen is the object that is mounted on a transparent slide between the light source and the observer (Köhler, 1893). The function of the microscope requires that the image of the object on the observer's retina is in sharp focus, whereas the image of the light source (usually a thin filament) should be nonexistent. This is achieved by placing the object beyond the focal point of the condenser lens that converts the point source radiated light to parallel plane waves. Because the source of light is spatially coherent, if its image is not sufficiently diffused and defocused at the object plane, then an image of the filament would be projected on the object (the specimen) and give rise to a distorted image. In hearing, the design logic is diametrically opposite, as the acoustic sources are of prime interest, whereas the passive objects that only reflect sound are of much less importance, relative to the sources.

A second system that employs a similar principle is an optical head-mounted display for producing visible text that is overlaid on whatever visual scene the wearer is looking at (von Waldkirch et al., 2004). By applying defocus and using partially coherent light to produce the text, it is possible to create a sharp image of the letters regardless of the accommodation of the eye (§16.2), as accommodation reacts only to incoherent light. If the text is lit incoherently as well, then it becomes extremely difficult to keep it sharp with accommodation continuously modifying the focal length of the lens. In contrast, in hearing, coherence itself is a parameter of the sound source and its environment. Using defocus, the hearing system may be able to differentiate the amount of blur assigned to different signal types (or signal components) according to their degree of coherence. It is also possible that a partially coherent image is obtained from coherent and incoherent imaging pathways, which are combined to produce an optimal quality with an appropriate amount of blur.

The effect of defocus was indirectly examined in §13.4 through its effects on the modulation transfer function (MTF) for incoherent and coherent inputs. Because of the relatively high cutoff frequencies associated with these MTFs and the effect of the nonuniform neural downsampling in the system, it was difficult to identify many interesting test cases that differentiate the two coherence extremes. This is largely because the auditory filters overlap and normally do not run into the modulation bandwidths associated with these MTFs. Nevertheless, the analysis enabled us to predict that differentiation between coherent states is dependent on the input bandwidth, as the degree of coherence is inversely proportional to the spectral bandwidth of the signal.

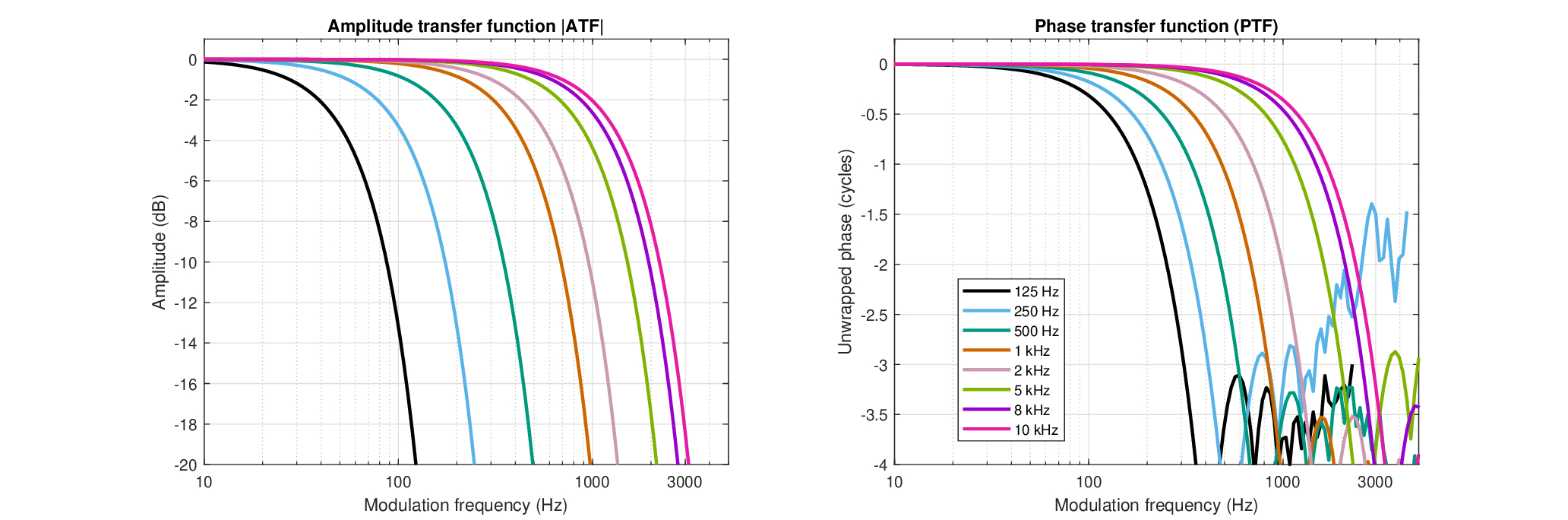

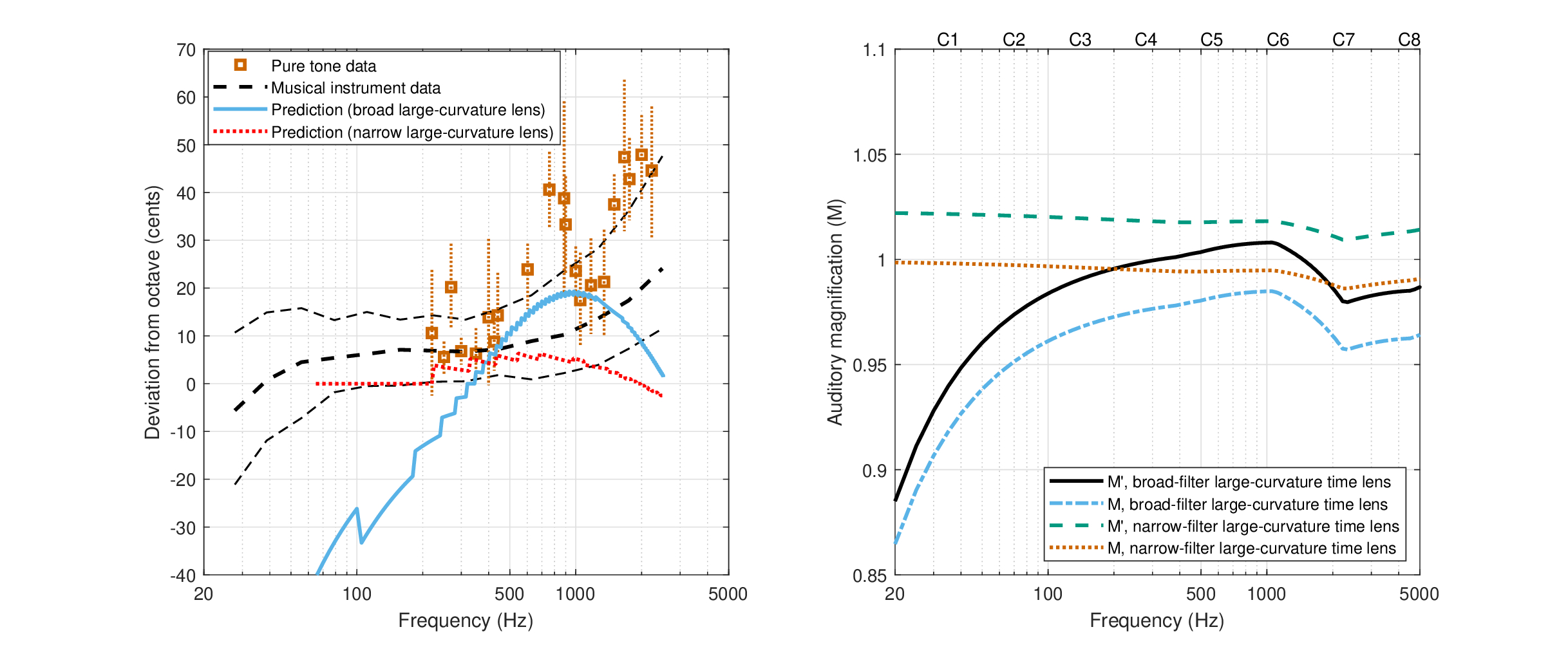

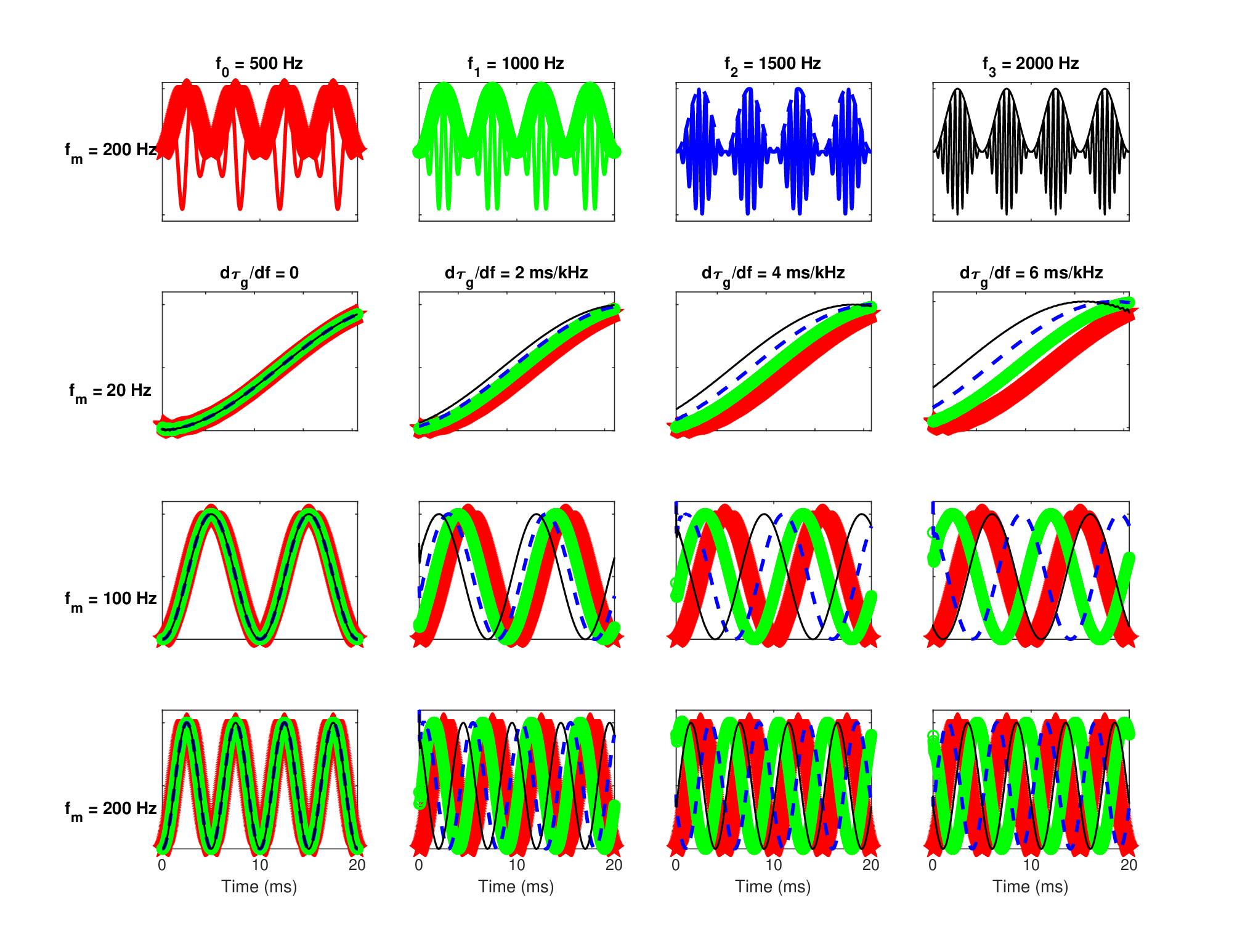

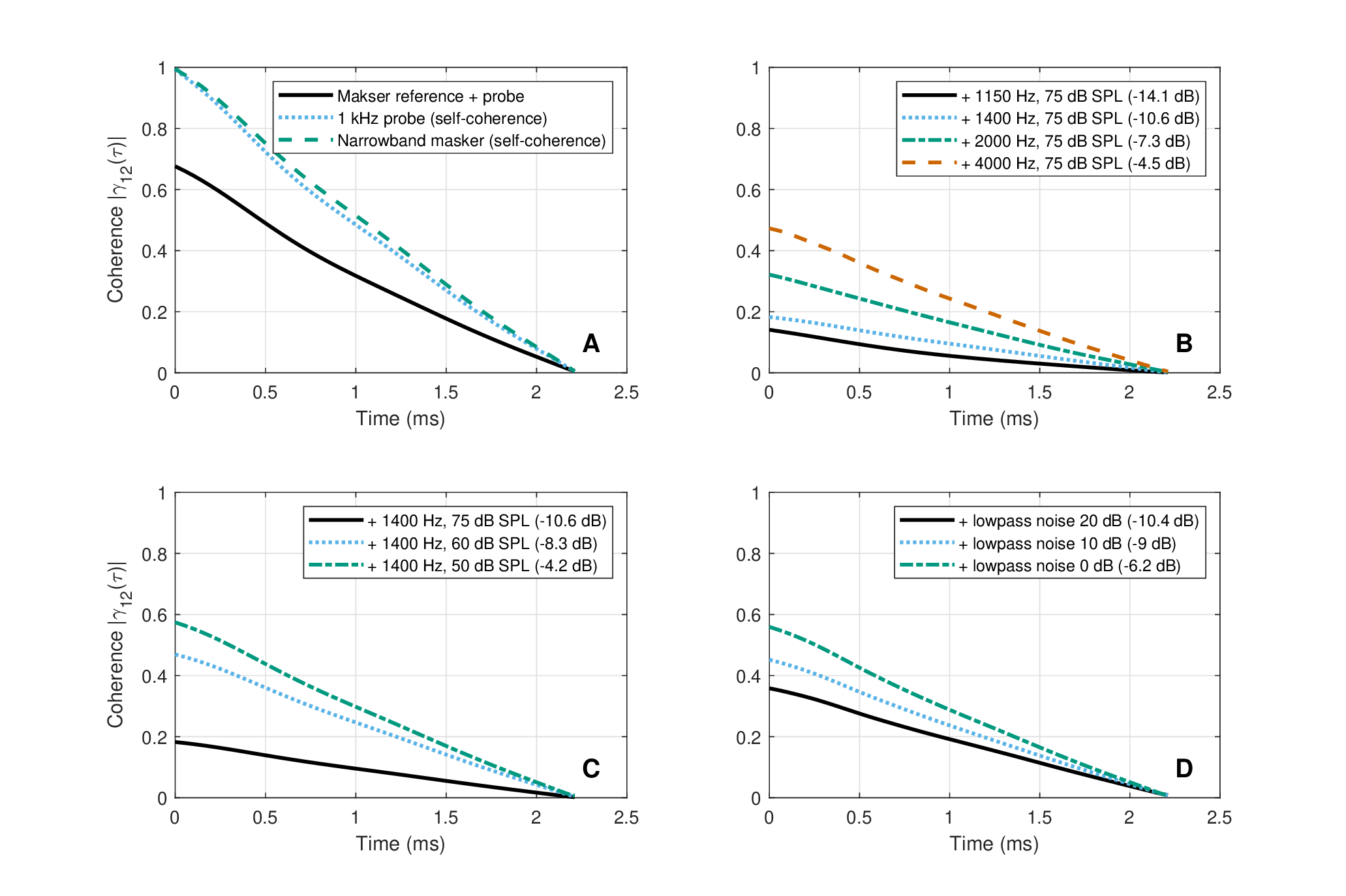

The (coherent) amplitude transfer function (ATF) and phase transfer function (PTF) are plotted in Figure 15.1. Remembering that partially coherent signal intensity can be expressed as the sum of the coherent and incoherent intensities (Eq. §8.21), these functions apply also to the coherent component in partially coherent signals. Wherever the signals become truly incoherent, the phase response of the function becomes meaningless, and it is reduced to the familiar optical transfer function (OTF) or MTF. In pure tones too, which are completely coherent, the PTF is inconsequential as it phase-shifts the tone by a constant factor, which cannot be detected by the ear without additional interfering carriers. Therefore, the best test cases for the defocus may be signals of finite bandwidth that are sensitive to phase, where the power spectrum model breaks down.

Figure 15.1: The estimated amplitude transfer function (ATF, left) and (unwrapped) phase transfer function (PTF, right) of the human auditory system (Eq. §13.25), using the parameters found in §11 and low-frequency corrections from §12.5.

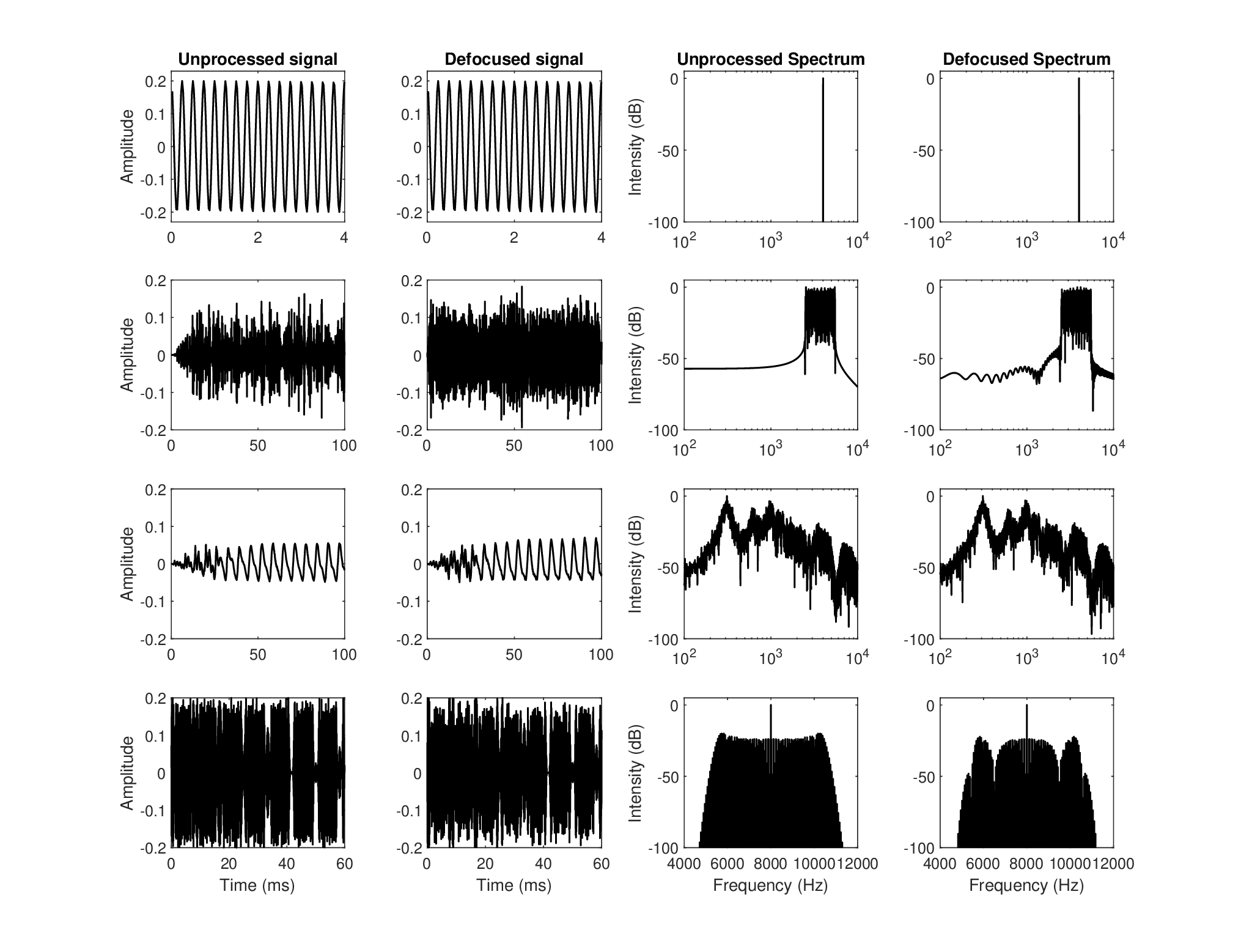

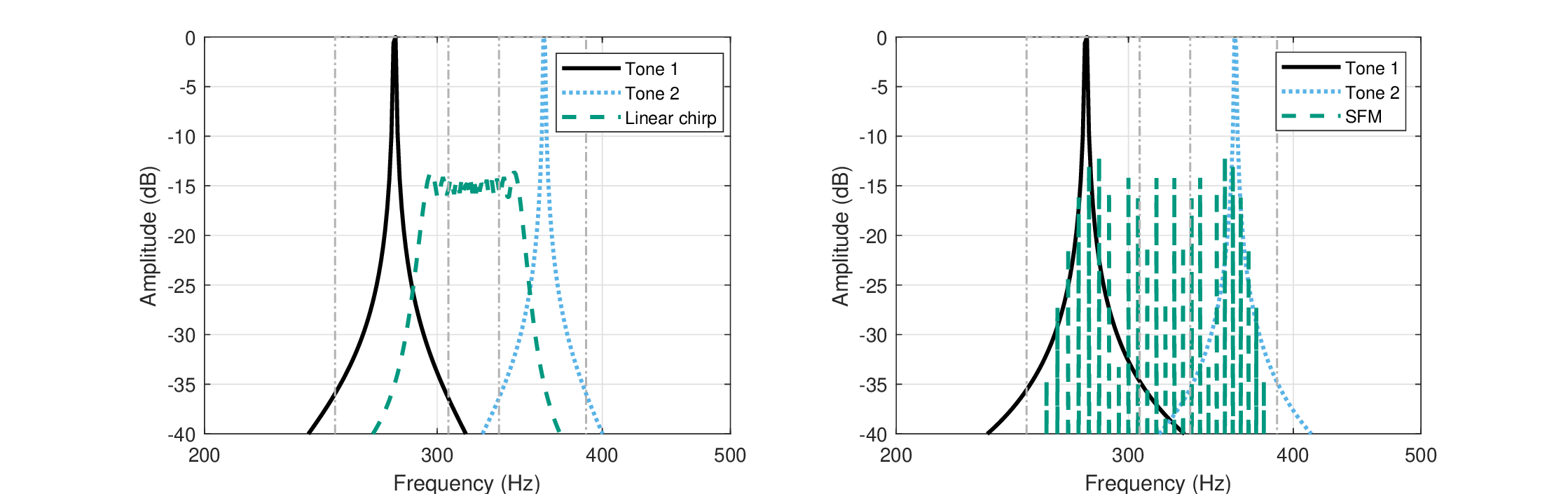

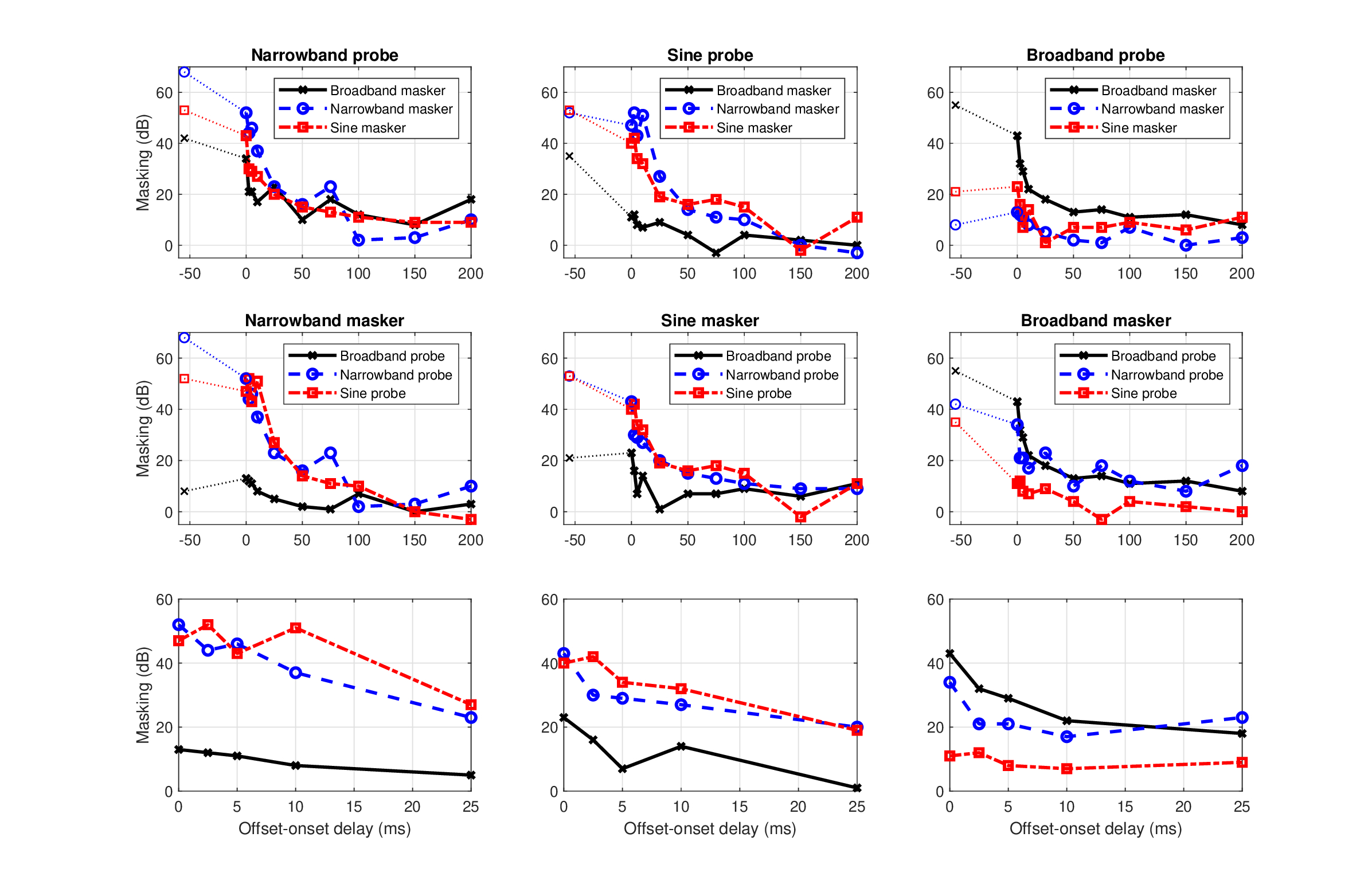

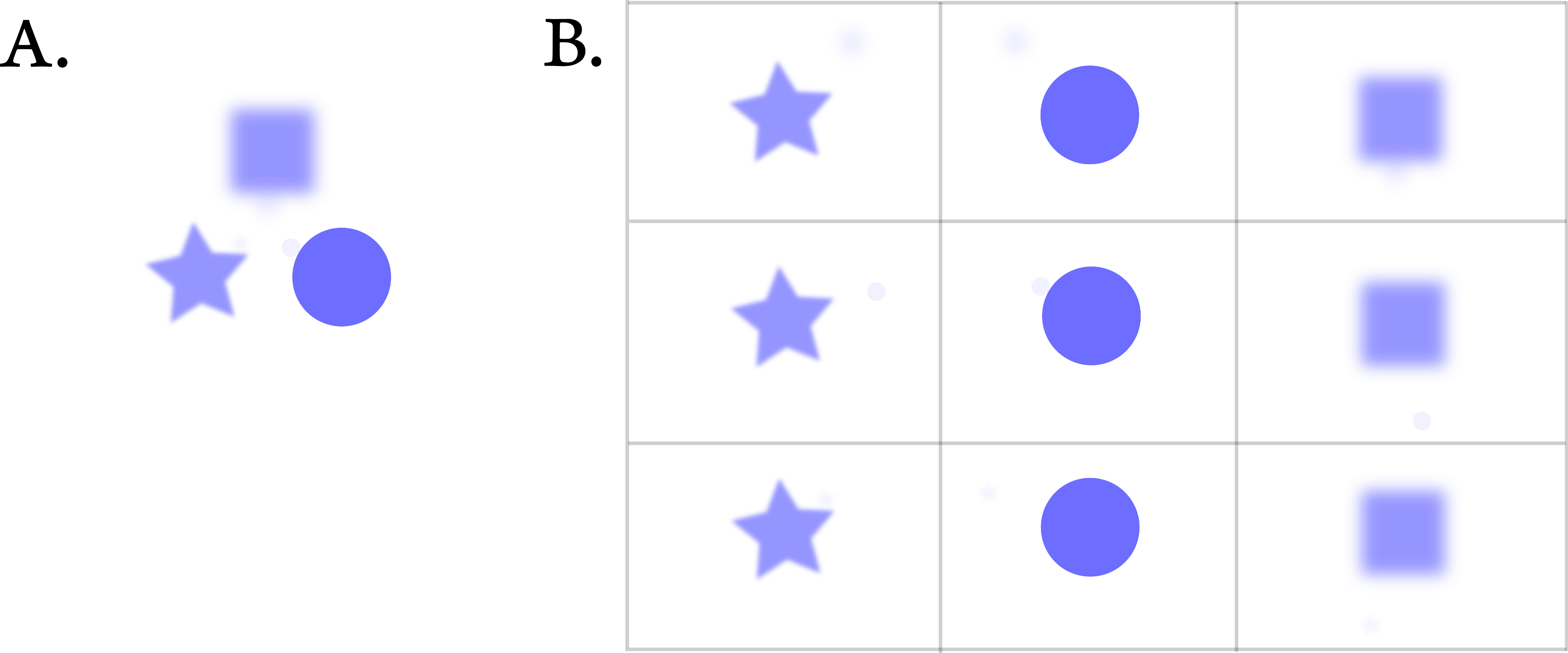

Several signal types are displayed in Figure 15.2 with and without the effect of the estimated auditory ATF142. The responses were obtained by generating a complex temporal envelope and multiplying it in the frequency domain with the ATF143. Critically, the negative frequencies of the modulation domain were retained in sound processing. The most coherent and narrowest signals are also the ones that are unaffected by the defocus and by the MTF on the whole, as its low-pass cutoff is higher than half the signal bandwidth (for signals whose carrier is centered in the auditory filter). The effect of the aperture low-pass filtering is illustrated using the different signals—notably their amplitude-modulation (AM). The defocus (i.e., its quadratic phase) directly affects the signal phase and any frequency-modulated (FM) parts (see caption in Figure 15.2 for further details). Both the narrowband noise and the AM-FM signal were deliberately designed to have relatively broadband spectra, so as to emphasize the dispersive effect. In all cases but the pure tone, it is the author's impression that the defocused version may be considered less sharp than the unprocessed version, although the effect is not the same in the different cases. The narrowband noise sounds narrower and with less low-frequency energy, which raises the perceived pitch of the filtered noise. The speech sample sounds duller, but the effect is subtle with the neural group-delay dispersion value obtained. The FM sound is made duller after filtering, and its pitch is pushed both lower and higher than the pitch of the unfiltered version. However, simulating a more correct auditory defocusing blur may require tweaking the parameters (neural dispersion, temporal aperture, or filter bandwidth) and, more importantly, applying nonuniform sampling that resembles adaptive neural spiking and that captures the low-pass filtering and decohering effects that it may have after repeated resampling and downsampling (§14.8).

Figure 15.2: The effect of the auditory defocus on four signals of different modulation spectra and levels of coherence. Each row corresponds to a signal type, where the leftmost plot is the unprocessed time signal, the second from the left is the defocused time signal obtained by multiplying its modulation spectrum with the complex ATF (see Figure 15.1), the third is the power spectrum of the unprocessed signal and the rightmost plot is the power spectrum of the defocused signal. The first row is a 4 kHz pure tone, which is unaffected by the modulation filter. The second row is a rectangular-shaped narrowband noise centered at 4 kHz with 3 kHz total bandwidth. The processed and unprocessed sounds were RMS equalized to make their loudness comparable. The audible effect of the defocus filter is subtle, as it slightly lowers the pitch of the noise, and it is caused by both the aperture and quadratic phase. The third row is taken from a 2 s long male speech recording in an audiometric booth (only the first 100 ms are displayed) that was bandpass-filtered around seven octave bands (125–4000 Hz, 4th-order Butterworth, bandwidth equal to the equivalent rectangular bandwidth (ERB) (Eq. §11.17) (Glasberg and Moore, 1990)). The unprocessed version was obtained by using the Hilbert envelope and phase as a complex envelope to modulate a pure tone carrier in the respective octave band and the total signal is the summation of all seven bands. The defocused version was the same, but the complex envelope was filtered in the modulation frequency domain by the ATF before modulating the carrier. The audible effect was not dominated by the quadratic phase itself, but rather by the aperture, which results in a thinner sound. The last row is of a strongly modulated 8 kHz carrier, whose AM-FM envelope is: \(1+\sin\left[2\pi 50t + 40\cos(2\pi 60t)\right]\). Once again, the audible effect is subtle and makes the defocused sound slightly muffled and lower-pitched, although this time it was caused mainly by the quadratic phase. Refer to the audio demo directory /Figure 15.2 - auditory defocus/ to hear the corresponding examples.
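The processing described in the caption can be sketched in a few lines. This is a minimal illustration only, not the code used to produce the figure: it assumes a Gaussian low-pass magnitude and a purely quadratic phase for the ATF, and the names `defocus_filter` and `rms`, as well as the values of `cutoff_hz` and `phase_curvature`, are hypothetical placeholders rather than the estimated auditory parameters from §11.

```python
import numpy as np

def defocus_filter(envelope, fs, cutoff_hz, phase_curvature):
    """Filter a complex envelope in the modulation-frequency domain.

    Assumed (hypothetical) ATF: a Gaussian low-pass magnitude that is 3 dB
    down at cutoff_hz, times a quadratic phase exp(1j * phase_curvature * omega**2).
    """
    n = len(envelope)
    f = np.fft.fftfreq(n, d=1.0 / fs)  # two-sided axis: negative frequencies are kept
    magnitude = np.exp(-0.5 * np.log(2.0) * (f / cutoff_hz) ** 2)
    phase = phase_curvature * (2.0 * np.pi * f) ** 2
    return np.fft.ifft(np.fft.fft(envelope) * magnitude * np.exp(1j * phase))

def rms(x):
    """Root-mean-square value of a real or complex signal."""
    return np.sqrt(np.mean(np.abs(x) ** 2))

fs = 48_000                                  # sampling rate (Hz), an arbitrary choice
t = np.arange(int(0.1 * fs)) / fs            # 100 ms of signal
carrier = np.exp(2j * np.pi * 8000.0 * t)    # 8 kHz carrier

# AM-FM envelope of the last row of Figure 15.2.
envelope = 1.0 + np.sin(2 * np.pi * 50 * t + 40 * np.cos(2 * np.pi * 60 * t))
unprocessed = np.real(envelope * carrier)

# Hypothetical filter values, chosen only to make the effect visible.
blurred_envelope = defocus_filter(envelope, fs, cutoff_hz=500.0, phase_curvature=1e-7)
defocused = np.real(blurred_envelope * carrier)
defocused *= rms(unprocessed) / rms(defocused)   # RMS equalization

# A constant envelope (pure tone) has only a DC modulation component,
# so it passes through the filter unchanged.
tone_envelope = np.ones_like(t, dtype=complex)
tone_out = defocus_filter(tone_envelope, fs, cutoff_hz=500.0, phase_curvature=1e-7)
```

Because the assumed ATF is even in frequency but not conjugate-symmetric, a real envelope generally becomes complex after filtering, which is why the two-sided modulation spectrum has to be retained before remodulating the carrier.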

It appears that the auditory defocus tends to interact with sounds that are clearly broadband. When they are not resolved into narrowband filters, these sounds potentially contain high modulation frequencies that may be affected either by the low-pass amplitude response or by the phase of the ATF. The narrowband noise in Figure 15.2 is an example of such a sound, whose random variations cause instantaneous phase changes that map to very high nonstationary FM rates.

The prominence of defocus in listening to realistic sound environments is unknown at present. The most useful portion of the (real) modulation spectrum of (anechoic) speech is well-contained below 16 Hz (Drullman et al., 1994a), mostly for consonants, whereas temporal envelope information for vowels is mostly contained below 50 Hz (Shannon et al., 1995). Using the estimated auditory dispersion parameters from §11, such a modulation spectrum is unlikely to be strongly affected by group-velocity dispersion alone, which may have a larger effect on the FM parts of speech in case they can be associated with higher instantaneous frequencies. This conclusion is somewhat supported by the crudely processed speech example of Figure 15.2, which disclosed only a subtle blur compared to the unprocessed version. Nevertheless, the analysis in the final chapters of this work will suggest that the role of defocus is greater than may initially appear from the examples given above.

15.6 The temporal resolution of the auditory system

15.6.1 Temporal acuity

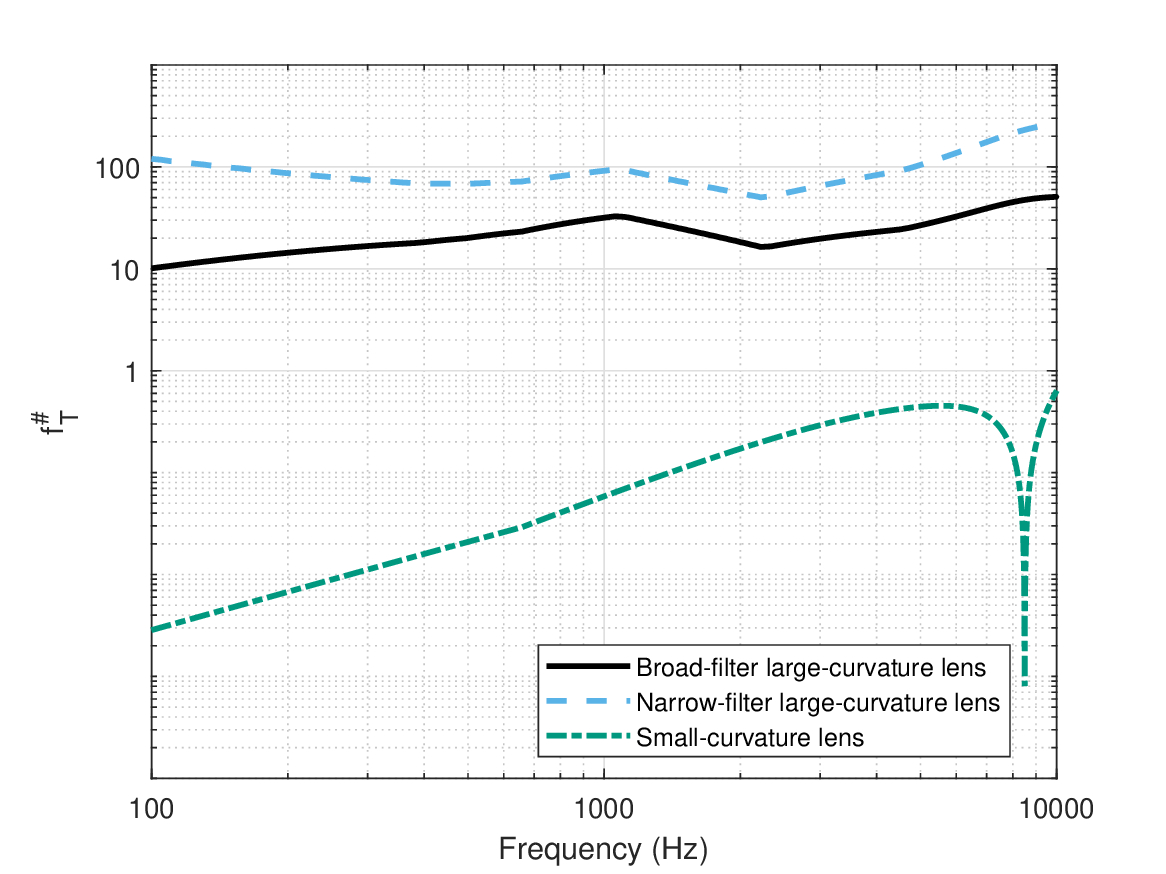

One of the most basic aspects of any imaging system is its resolving power, which quantifies the smallest detail that can be imaged distinctly from adjacent details. In spatial imaging, it is intuitively conveyed by the point spread function, which converts a point in the object plane to a disc with a blurred circumference in the image plane. The size of the disc is minimal when the system is in sharp focus and with no aberrations, as it is only constrained by the blurring effect of diffraction. An analogous effect is obtained using the impulse response function in temporal imaging, only that the limiting effect is caused by group-velocity dispersion instead of diffraction. Using the estimated system parameters, it is possible to use the impulse response function of Eq. §13.20 to compute the theoretical temporal resolution values at different frequencies.

There are several established criteria in optics for imaging resolution based on two-dimensional patterns (usually assuming circular or rectangular apertures). The most famous one is the Rayleigh criterion, which is based on the image of two object points. When the center of one imaged point falls on the first zero of the diffraction pattern (an Airy disc) of the second point, the two points are just about resolved (Rayleigh, 1879a; Goodman, 2017; pp. 216–219; §4.2.2). There is no standard criterion in temporal imaging, although Kolner (1994a) suggested one for a system with a rectangular aperture in sharp focus. In analogy to spatial imaging, he equated the location of the first zero of the corresponding impulse response sinc function to the spacing required for resolving two impulses.

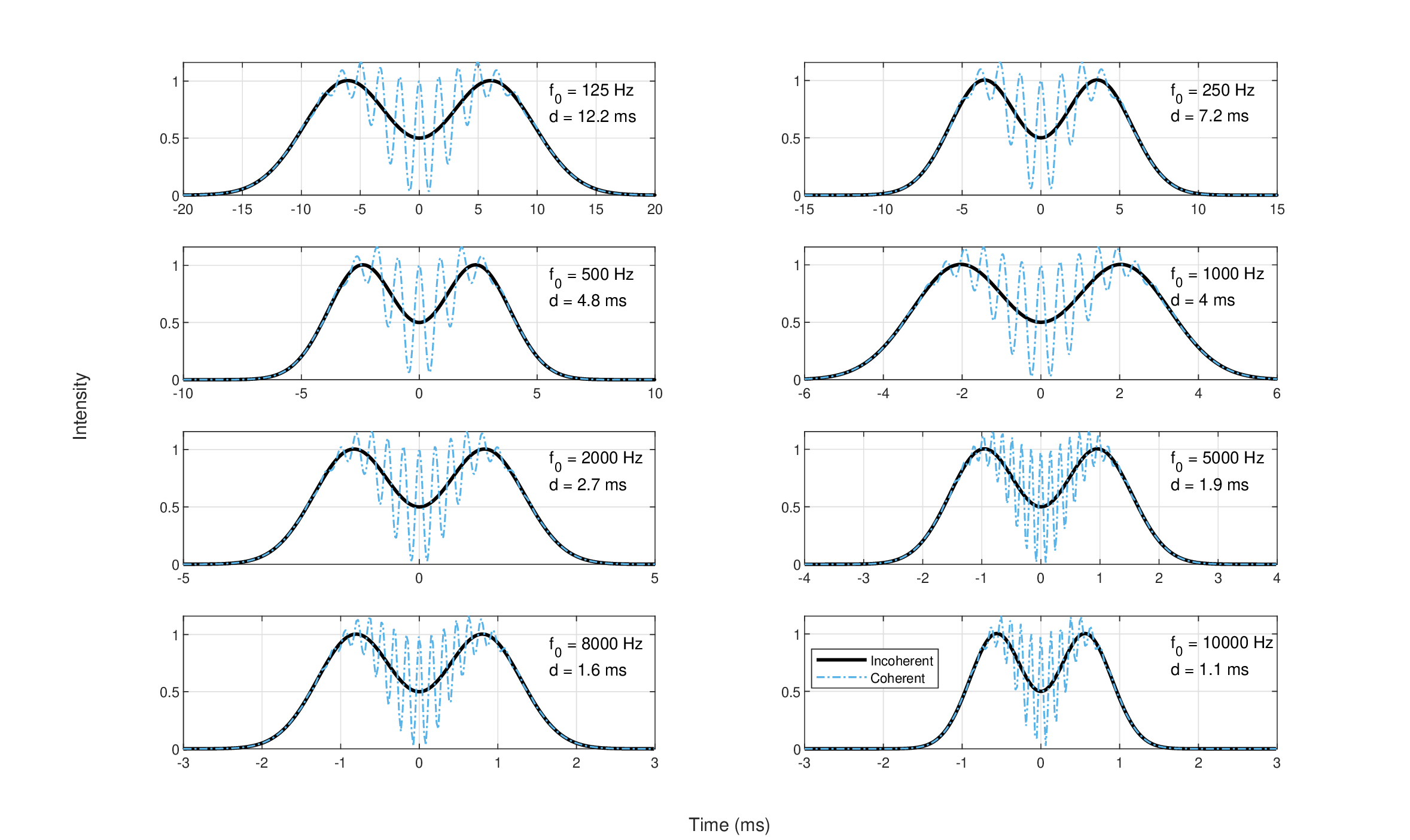

As the present work employs a Gaussian aperture shape—an unphysical shape that has no zeros, but is convenient to work with analytically and appears to correspond to the auditory pupil shape—the criterion here will be somewhat more arbitrary. We shall consider a sequence of two impulses as resolved if their responses intersect at the half-maximum intensity. Using the auditory system parameters found in §11 and the generalized (defocused) impulse response for a Gaussian aperture (Eq. §13.20), the resolution at different spectral bands can be readily obtained by using an input of the form \(\delta(-d/2) + \delta(d/2)\), for two pulses separated by a gap of \(d\) seconds, measured between their peaks. The gap duration can be calculated by convolving the impulse response with a delta function and finding the time \(d/2\) at which the respective intensity drops to a quarter of the maximum. The two incoherent pulses then intersect at half the maximum level. After some algebraic manipulation, we obtain the gap duration:

| \[ d = v T_a\sqrt{\left(\frac{16\ln2}{T_a^2}\right)^2 + \left(\frac{1}{u} + \frac{1}{v}+ \frac{1}{s} \right)^2 } \] | (15.1) |
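Eq. 15.1 is straightforward to evaluate numerically. The sketch below assumes that \(u\) and \(v\) denote the cochlear and neural group-delay dispersions, \(s\) the time-lens curvature, and \(T_a\) the temporal aperture, as in the earlier chapters. The function name `gap_duration` and all numerical values are hypothetical and serve only to show the orders of magnitude involved; they are not the estimated parameters of §11.

```python
import math

def gap_duration(u, v, s, T_a):
    """Evaluate Eq. 15.1 for the gap d (in seconds).

    u, v : dispersion parameters (s^2), s : time-lens curvature (s^2),
    T_a  : temporal aperture (s). All are assumed to be nonzero.
    """
    aperture_term = 16.0 * math.log(2.0) / T_a**2    # first term under the root
    defocus_term = 1.0 / u + 1.0 / v + 1.0 / s       # vanishes in sharp focus
    return v * T_a * math.hypot(aperture_term, defocus_term)

# Hypothetical values, for illustration only.
u, v, T_a = 1.0e-6, 5.0e-7, 2.0e-3

# Sharp focus: the imaging condition 1/u + 1/v + 1/s = 0 leaves only the aperture term.
s_focus = -1.0 / (1.0 / u + 1.0 / v)
d_focused = gap_duration(u, v, s_focus, T_a)

# Any other curvature adds defocus and lengthens the resolvable gap.
d_defocused = gap_duration(u, v, 1.0e-6, T_a)
```

In the sharp-focus limit the expression reduces to \(d = 16\ln 2\, v/T_a\), so the resolvable gap is then set by the aperture alone, and any residual defocus can only make it longer.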

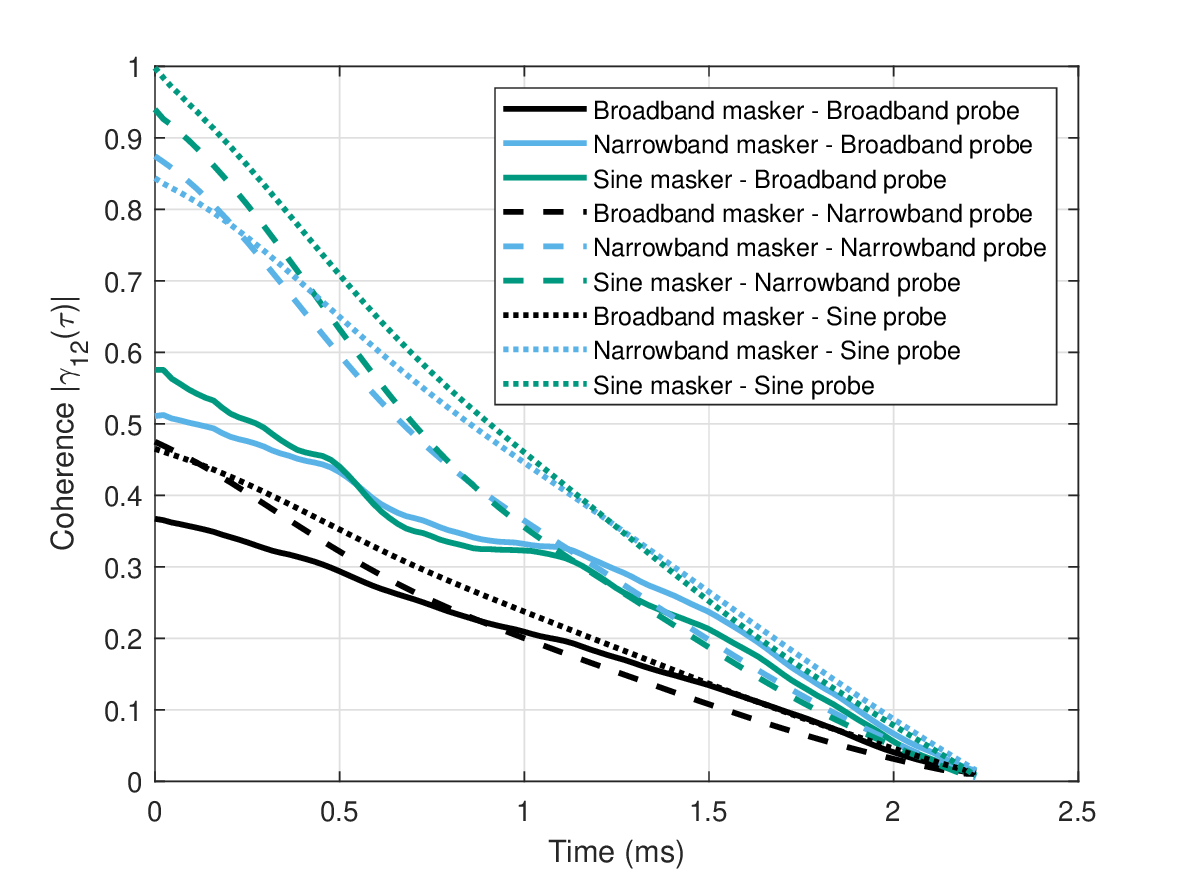

In order to obtain the intensity response for the incoherent case, the response to each pulse was squared independently (Eq. §13.48): \(I = I(-d/2) + I(d/2)\) (black curves in Figure 15.3). In the coherent case (Eq. §13.46), the summation of the complex amplitudes preceded the squaring: \(I = \left|a(-d/2) + a(d/2)\right|^2\), which is displayed in blue dashed lines of Figure 15.3.
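The difference between the two summation orders can be illustrated with a toy example. The sketch below does not use the impulse response of Eq. §13.20; instead, it assumes a generic Gaussian pulse with a quadratic phase, and the helper `pulse_amplitude` and the values of `sigma` and `chirp` are hypothetical.

```python
import numpy as np

def pulse_amplitude(t, t0, sigma, chirp):
    """Complex amplitude of a Gaussian pulse centred at t0 with a quadratic phase."""
    tau = (t - t0) / sigma
    return np.exp(-0.5 * tau**2) * np.exp(1j * chirp * tau**2)

sigma = 1.0e-3    # hypothetical pulse width (s)
chirp = 4.0       # hypothetical quadratic-phase strength (rad)

# Spacing at which each single-pulse intensity has dropped to 1/4 at the midpoint.
d = 2.0 * sigma * np.sqrt(np.log(4.0))

t = np.linspace(-5e-3, 5e-3, 2001)   # odd length, so t = 0 lies on the grid
a1 = pulse_amplitude(t, -d / 2, sigma, chirp)
a2 = pulse_amplitude(t, +d / 2, sigma, chirp)

incoherent = np.abs(a1) ** 2 + np.abs(a2) ** 2   # square each response, then sum
coherent = np.abs(a1 + a2) ** 2                  # sum the amplitudes, then square
interference = coherent - incoherent             # cross term 2 * Re(a1 * conj(a2))

mid = len(t) // 2                                # index of the midpoint between the pulses
```

In this toy case the incoherent sum at the midpoint is half of a single pulse's peak intensity, whereas the two amplitudes are in phase there, so the coherent sum fills the gap completely. Away from the midpoint, the cross term oscillates because the phase difference between the two chirped pulses grows linearly with time.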

As delta impulses are incoherent by definition, the coherent responses of Figure 15.3 are shown more for academic interest, although short Gabor pulses produce very similar results (see §F). The coherent pulses give rise to visible interference (fast oscillations) in the final intensity pattern, which does not match known auditory responses. These oscillations disappear upon increasing the gap \(d\), so that \(1.1d\) produces a more visible gap in the interference pattern, which almost vanishes completely at \(1.4d\) (some oscillations are visible in the trough). The incoherent pulses produce smooth responses that are more easily resolved using the chosen criterion, which is also how the resolution limits were determined. The response was extended up to 10 kHz, but above this frequency the estimates of the cochlear dispersion could not be trusted (see Figure §11.6).

Figure 15.3: The temporal resolution of two consecutive impulses spaced by \(d\) milliseconds, according to the impulse response function of Eqs. §13.20 and 15.1 and the parameters from §11. The spacing was chosen so that pulses are considered resolved when their (summed) intensity image is exactly at half of the peak value. The black solid curve shows the incoherent pulse response. The blue dash-dot curves show the “coherent impulse responses” as a demonstration of the effect of the phase term in the defocused impulse response function. The oscillations are a result of interference, which subsides when the gap is increased.

The results in Figure 15.3 represent the output of a single auditory channel and should be compared to narrowband temporal acuity data from the literature, if available. In auditory research, temporal acuity assessment and definition is challenged by the fact that multiple cues (e.g., spectral, intensity) can account for just-noticeable differences between stimuli. Therefore, different methods have been devised to obtain specific types of acuity, which are not always consistent with each other (Moore, 2013; pp. 169–202). As a rule of thumb, the auditory system is able to resolve 2–3 ms (Moore, 2013; p. 200), which corresponds well only to the obtained resolution at 2 kHz (2.7 ms) and maybe at 4 and 8 kHz (1.8 and 1.6 ms, respectively) and 1 kHz (4 ms). At low frequencies, the resolution drops (12.2 ms at 125 Hz, 7.2 ms at 250 Hz, and 4.8 ms at 500 Hz), but less so than it would have dropped had the temporal aperture remained uncorrected (i.e., if we let it be unphysically long; see §12.5.2). The 4 and 8 kHz predictions are a bit shorter than the data we obtained using Gabor pulses (see §E.2.2), which had a median acuity of 2.3–3.4 ms at 6 kHz, and 2.8–4.2 ms at 8 kHz, with the two best performing subjects having 1.8–2.7 ms and 2.1–4 ms, respectively. The two lowest frequencies as well as frequencies above 8 kHz are particularly susceptible to errors, because of greater uncertainty in the dispersion parameters and temporal aperture144. Such frequency dependence of the temporal resolution has usually not been observed in past studies that employed narrowband noise (e.g., Green, 1973; Eddins et al., 1992), but values as short as obtained here at high frequencies are typical with broadband stimuli (e.g., Ronken, 1971). However, gap detection (\(>\) 50% threshold) using sinusoidal tones in Shailer and Moore (1987; Figures 2–3) was 2–5.5 ms at 400 Hz and 2.5–3.5 ms at 1000 Hz, compared to predicted gap thresholds of 4.1 ms (not plotted) and 3.6 ms, respectively.

Implicit in this discussion is that the built-in neural sampling of the auditory system can deal with arbitrarily proximate pulses. This is probably true for broadband sounds that stimulate multiple channels along the cochlea, but may be a stretch for narrowband sounds, whose response depends on fewer fibers145. At least in the bandwidth for which data are available, this assumption appears to be met.

This gap detection computation was based on a Gaussian-shaped pupil function, which appears to be approximately valid (§12.5). However, we do not know the actual pupil function shape in humans, which may eventually alter these estimates to some extent.

15.6.2 Envelope acuity

While the temporal acuity as quantified above defines the shortest sound feature that can be resolved within a channel, such a fine resolution of 2–3 ms is not generally found in continuous sound, where the acuity tends to significantly degrade. This was measured in Experiments 4 and 5 in §E with click trains that included 8 or 9 clicks, to reduce the onset effect and induce some pattern predictability in the listener. When these short events are interpreted as modulations, they suggest a drop in the instantaneous modulation rate from 300–600 Hz to 60–100 Hz. This suggests that the bandwidth of the various psychoacoustic TMTFs may not necessarily reflect the fidelity as it relates to the specific contents of the temporal and spectral envelopes.

Several studies can attest to this assertion. For example, the discrimination between amplitude-modulation frequencies has been tested a few times with tonal, narrowband noise, and broadband noise carriers (e.g., Miller and Taylor, 1948; Buus, 1983; Formby, 1985; Hanna, 1992; Lee, 1994; Lemanska et al., 2002). Roughly, these measurements reveal that the discrimination is fairly good (about 1–2 Hz) for low modulation frequencies (below 20 Hz) and gets gradually worse at high modulation frequencies (approximately 20 Hz for 150 Hz modulation, for unresolved modulations). This repeats for different carrier types and frequencies and does not vary much between listeners. Such findings support the model of an auditory modulation filter bank that has broadly tuned bandpass filters, which get broader at higher modulation frequencies, similar to the critical bands in the audio domain (Dau et al., 1997a; Dau et al., 1997b; Ewert and Dau, 2000; Moore et al., 2009). A more recent study demonstrated how listeners are not particularly sensitive to irregularities in the temporal envelope, which were superimposed at lower frequencies than the regular AM frequency under test (Moore et al., 2019). The results of this experiment suggest that fluctuations in the instantaneous modulation frequency can go unnoticed by many listeners, who perceive a relatively coarse-grained version of the envelope. However, the individual variation in this experiment was large.

Since we defined blur earlier as a change in the contents of the temporal, and hence in the spectral envelope, these studies suggest that the system may not be particularly sensitive to blur in the modulation domain, at least in conditions of continuous sounds, as opposed to onsets or impulsive sound. In a way, this perception may represent a form of internal blur that corresponds to a tolerance that the auditory system has to different complex stimuli.

15.7 Polychromatic images

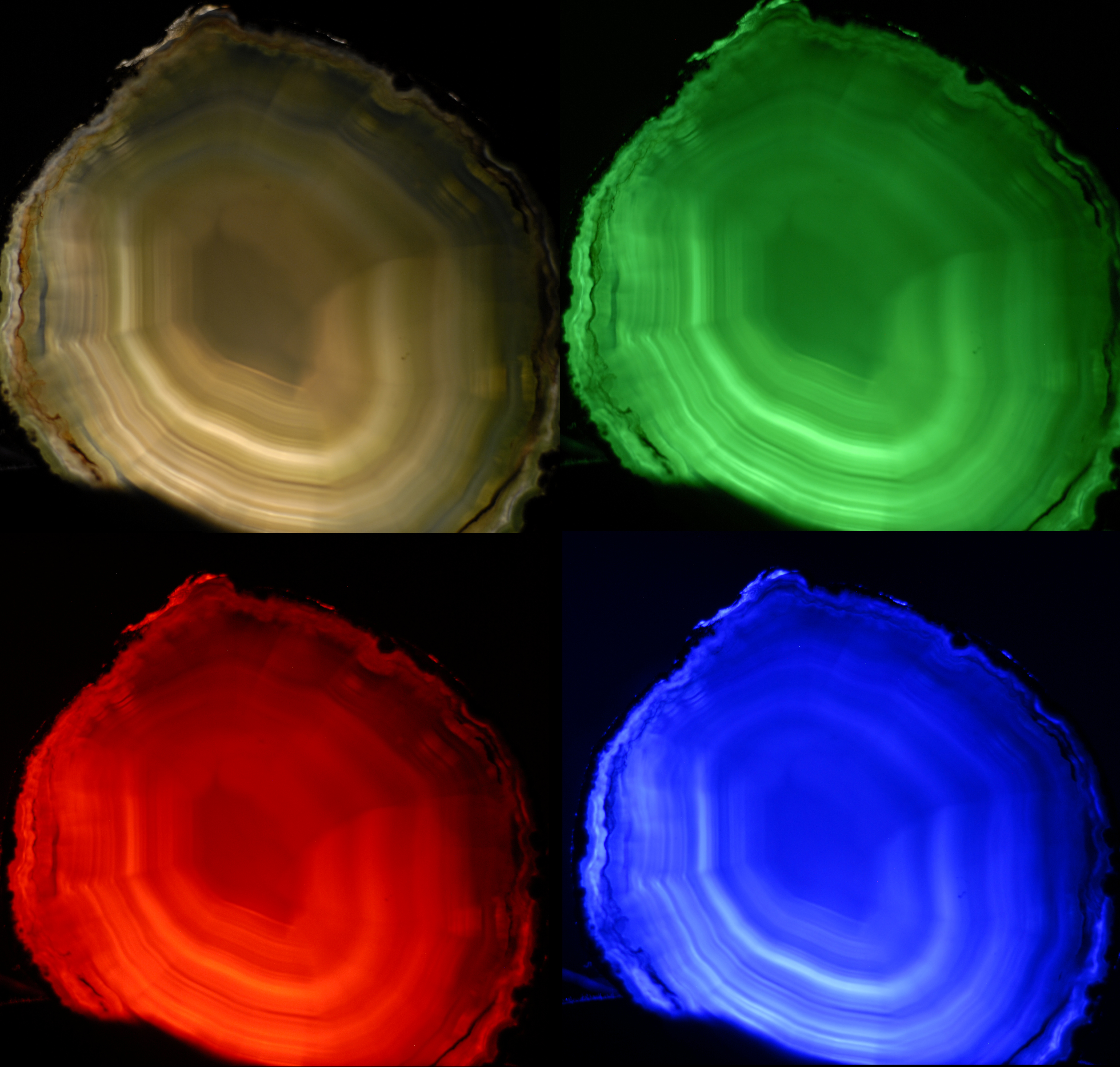

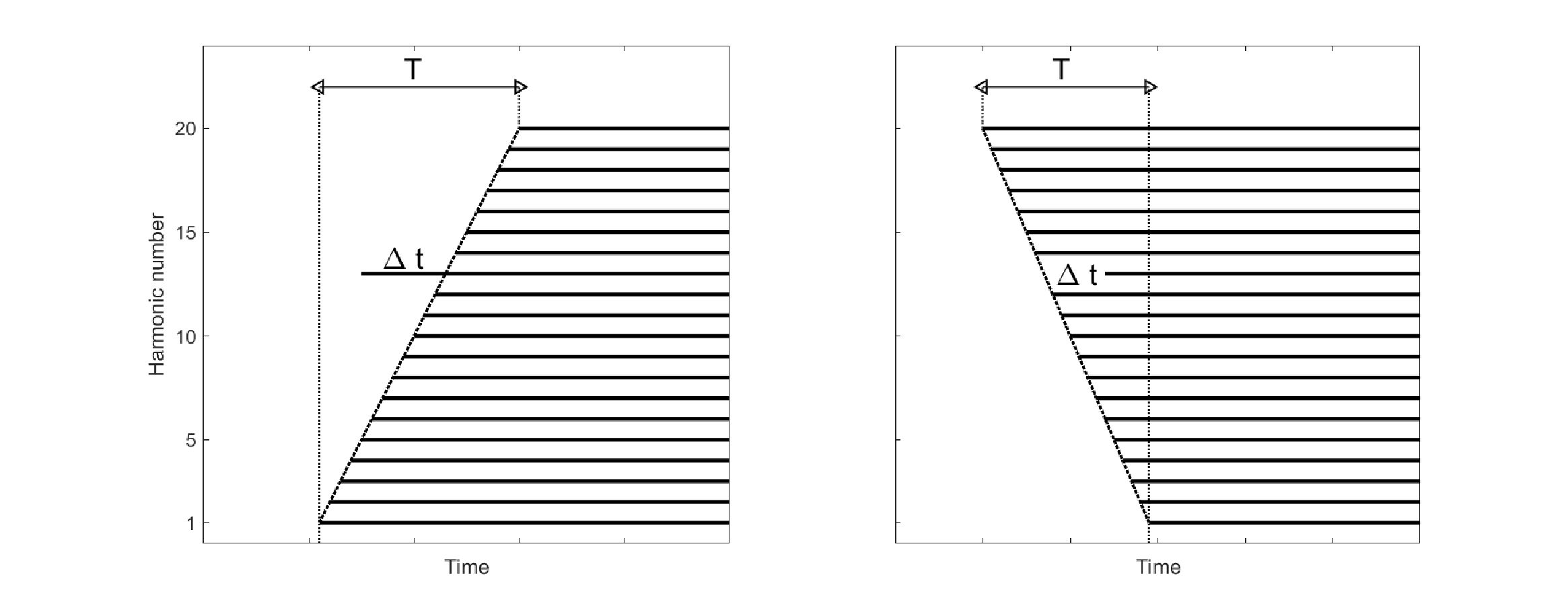

So far, the discussion and analysis of the auditory temporal images were done within a single narrowband channel. However, the modulation in real acoustic objects tends to affect multiple vibrational modes and is rarely limited to narrowband vibrations (single modes). For example, during a drum roll, the modes of vibration of the drum get excited together—they are comodulated—in a unique pattern of sound. More complex sounds such as speech also show high correlation across the spectrum in different modulation frequency bands—especially below 4–6 Hz (Crouzet and Ainsworth, 2001). The analogous optical situation is of an object that is lit by white light, whose spatial modulation spectra—one spectrum per color channel—are highly correlated as a result of being reflected from the same object, whose geometry affects all wavelengths nearly equally. The imaged object is then sensed as a superposition of very similar images of different primary colors, which can be processed by the corresponding cone photoreceptors (Figures 15.4 and 15.5). Importantly, the images created by the photoreceptor channels spatially overlap in a way that facilitates the perceptual reconstruction of the original object. In analogy, we would expect that the broadband auditory image would stem from a perceptual re-synthesis of temporally modulated narrowband images in different channels, which represent the acoustic object response as a whole. This across-channel broadband image is referred to as polychromatic—an adjective that distinguishes it from a mere broadband sound, for which the identity of the constituent monochromatic channels may be inconsequential.

Figure 15.4: Four images of a polished agate rock that is back-lit by an LED incoherent light source with variable colors. The top-left image is illuminated with white light (full spectrum) that produces the polychromatic image. The other three images are all monochromatic. Different details of the agate surface are emphasized under each light. More technical details about the LED sources are in Figure §8.3.

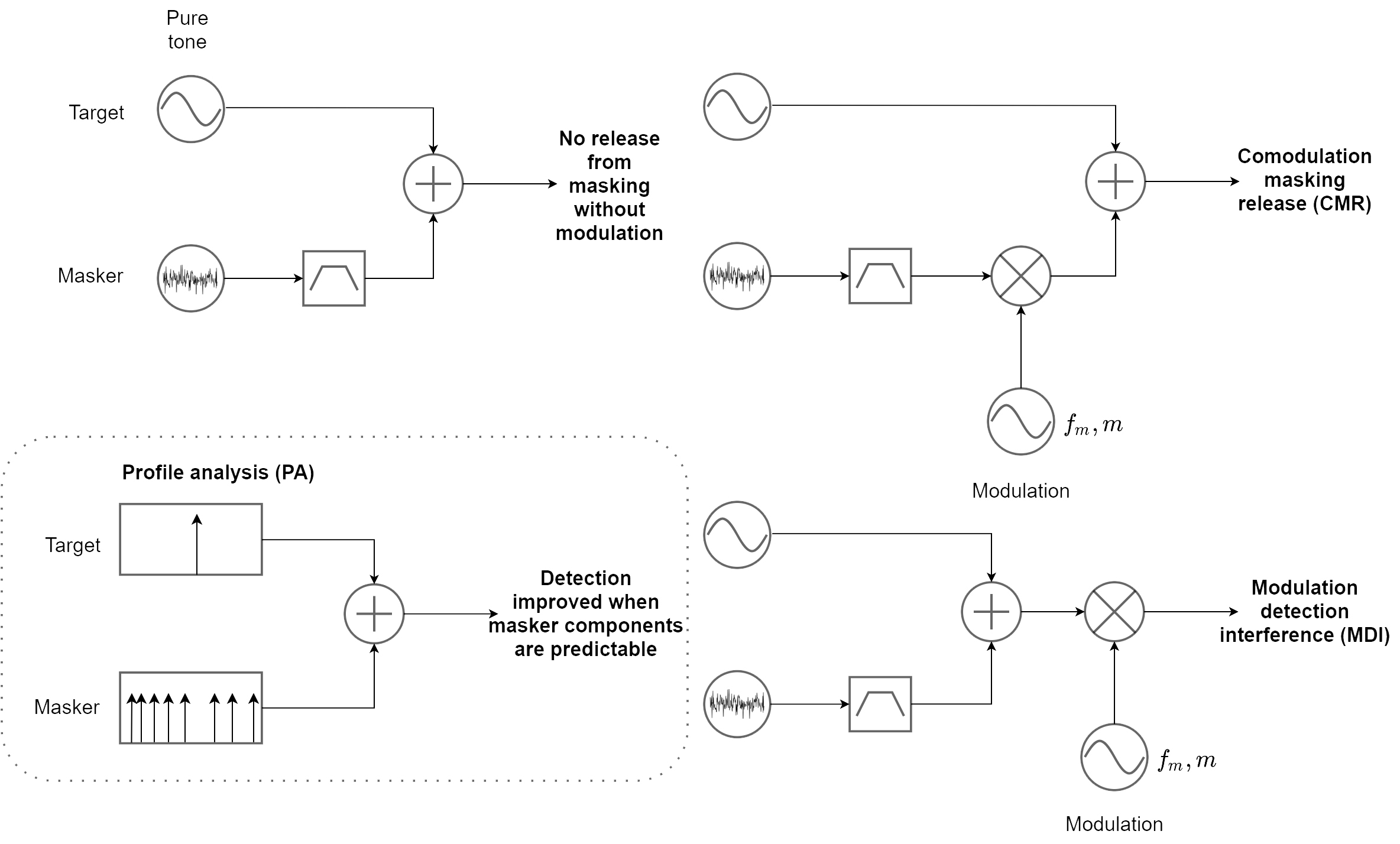

That the auditory system indeed combines across-channel modulation information as generated by common events has been repeatedly demonstrated in several effects—most famously, through the phenomenon of comodulation masking release (CMR; Hall et al., 1984). Normally, when a target tone is embedded in unmodulated broadband noise, its detection threshold increases as the noise occupies more of their shared bandwidth within a single auditory channel. The strength of this masking effect depends on the noise bandwidth as long as it is within the channel bandwidth, but is unaffected by noise components that are outside of the channel. However, if the noise is extended beyond the channel bandwidth and is also amplitude-modulated (not necessarily sinusoidally), then the masking effect decreases—information from adjacent and remote noise bands is used by the auditory system to release the target from the masking effect of the noise (see top plots in Figure 15.6). The effect is robust and has been shown to yield up to 10–20 dB in release from masking, depending on the specific variation (Verhey et al., 2003; Moore, 2013; pp. 102–108). The effect is also more or less frequency-independent, as long as the bandwidth of the noise is scaled with reference to the auditory filter bandwidth of the target (Haggard et al., 1990).

CMR has been interpreted as a form of pattern recognition and comparison across different bands of the signal, which is representative of real-world regularities of sounds (e.g., Hall et al., 1984; Nelken et al., 1999). As such, it is also considered an important grouping cue in auditory scene analysis (Bregman, 1990; pp. 320–325), which is effective as long as the different comodulated bands are temporally synchronized (or “coherent”, in the standard psychoacoustic jargon, §7.2.4) (Christiansen and Oxenham, 2014). In stream formation, temporal coherence (of the envelopes) can act as a strong grouping cue that binds across-frequency synchronized tones, but not asynchronous tones whose onsets do not coincide (Elhilali et al., 2009).

Figure 15.5: An illustration of a polychromatic image decomposed into three monochromatic color channels. The image is a spatial modulation pattern carried by incoherent broadband light, which is detected in three narrowband channels in the retina by red, green, and blue photoreceptors (long, medium, and short wavelength cones, respectively). The three monochromatic images are very similar, but some object details are not observable in all of them. For example, the birds' eyes appear to be almost uniform in blue light, whereas the existence of the iris and pupil can be seen most clearly in red and much less clearly in green.

There has been only one physiological demonstration of CMR at early processing stages (Pressnitzer et al., 2001). Spiking pattern correlates of CMR were found in the guinea pig anteroventral cochlear nucleus (AVCN) units—mainly of the primary-like and chopper-T types. In order for this effect to work, low-level integration is required that relies on the modulated masker in different channels being in phase. The authors modeled the results using a multipolar broadband unit that receives excitatory off-frequency inputs, which in turn inhibits a narrowband in-channel unit—inhibition that results in masking release. The existence of a broadband processing stage is supported also by psychoacoustic data, which ruled out a model of across-channel comparison of the different narrowband envelopes (Doleschal and Verhey, 2020). Furthermore, the CMR model of Pressnitzer et al. (2001) was successfully used to demonstrate how across-channel information may be advantageous in consonant identification under different conditions (i.e., in noise or when the temporal fine structure was severely degraded; Viswanathan et al., 2022).

Other effects exist that demonstrate the polychromatic auditory image primacy over spectral mechanisms, as the system prioritizes temporal cues of multiband signals at the apparent expense of unmodulated narrowband target signals. For example, in modulation detection interference (MDI), an amplitude- or frequency-modulated masker causes a decrease in detection sensitivity of a similarly modulated target at a distant channel (Figure 15.6, bottom right) (Yost et al., 1989; Wilson et al., 1990; Cohen and Chen, 1992). Thus, the modulated target cannot be easily heard as being separate from the masker. However, FM elicits a more limited MDI effect that does not always provide sufficient resolution across channels and modulation patterns, at least at high modulation frequencies (e.g., Lyzenga and Moore, 2005). Profile analysis is another phenomenon, whereby the detection of a level change of one of the components of a multicomponent masker depends on the entire across-frequency profile of the masker (Figure 15.6, bottom left) (Spiegel et al., 1981). The detection of a single-component target improves when the masker has known frequencies compared to when there is some uncertainty in its component frequencies. The spectral profile is often modeled as spectral modulation (e.g., Chi et al., 1999).

It may be argued that in all three effects mentioned—CMR, MDI, and profile analysis—the experimenters' designation of signal and noise (or target and masker) is incongruent with what the auditory system determines. Once the system identifies a potential across-frequency image, it attempts to optimize it as a whole, rather than as a loose collection of monochromatic images.

Figure 15.6: Three psychoacoustic paradigms that entail broadband information integration, beyond a single auditory channel. On the top left, the standard paradigm is shown of a target tone in masking noise. The bandpass filter symbol indicates that the masker bandwidth is a parameter in these experiments. Modulation is indicated with a sinusoidal source of frequency \(f_m\) and depth \(m\), which multiplies the noise or signal and noise. Multiplication is indicated by the mixer (cross sign).

The importance of the polychromatic representation in an ensemble of monochromatic channels may be gleaned from a study in cochlear implant processing by Oxenham and Kreft (2014). The authors showed how, unlike normal-hearing listeners, the speech-in-noise performance was identical for the cochlear-implant users regardless of the type of masker used: broadband Gaussian noise with random fluctuations, broadband tone complex with the same spectral envelope as the broadband noise, and the same tone complex with the superimposed modulation of the Gaussian noise. It was found that this undifferentiated pattern is not caused by insensitivity to temporal fluctuations, but rather by spectral smoothing, as the cross talk between the implant electrodes within the cochlea causes the different channel envelopes to mix across the spectrum. This was shown from speech intelligibility scores of normal-hearing listeners, who could no longer take advantage of the fluctuation difference between masker types, after the temporal envelopes extracted from 16 channels were summed and identically imposed on all 16 channels.

These phenomena and others may all attest to the image dominance, where modulation is involved, compared to unmodulated isolated sounds146. The CMR effect originally appeared to violate the critical band theory, which predicts that only information within auditory filters should be fused. However, by analogy to vision, this effect may be predictable if the auditory system “overlays” multiple monochromatic images to produce a single polychromatic image, or a fused or coherent stream, according to auditory scene analysis. MDI too is consistent with the system trying to form auditory images (“objects” in Yost et al., 1989) from a common acoustic source. Unlike vision that has its three monochromatic detectors interwoven in the same spatial array on the retina, overlaying the auditory imaging has to be done in time. Such a mechanism has been discussed at length with regard to periodicity in the inferior colliculus (periodotopy; Langner, 2015), which is nevertheless more restricted than general modulation patterns that are not necessarily periodic.

As a final note, it should be emphasized that some broadband sounds may not be amenable to representation as polychromatic images, if their temporal variations are independent across channels.

15.8 Pitch as an image

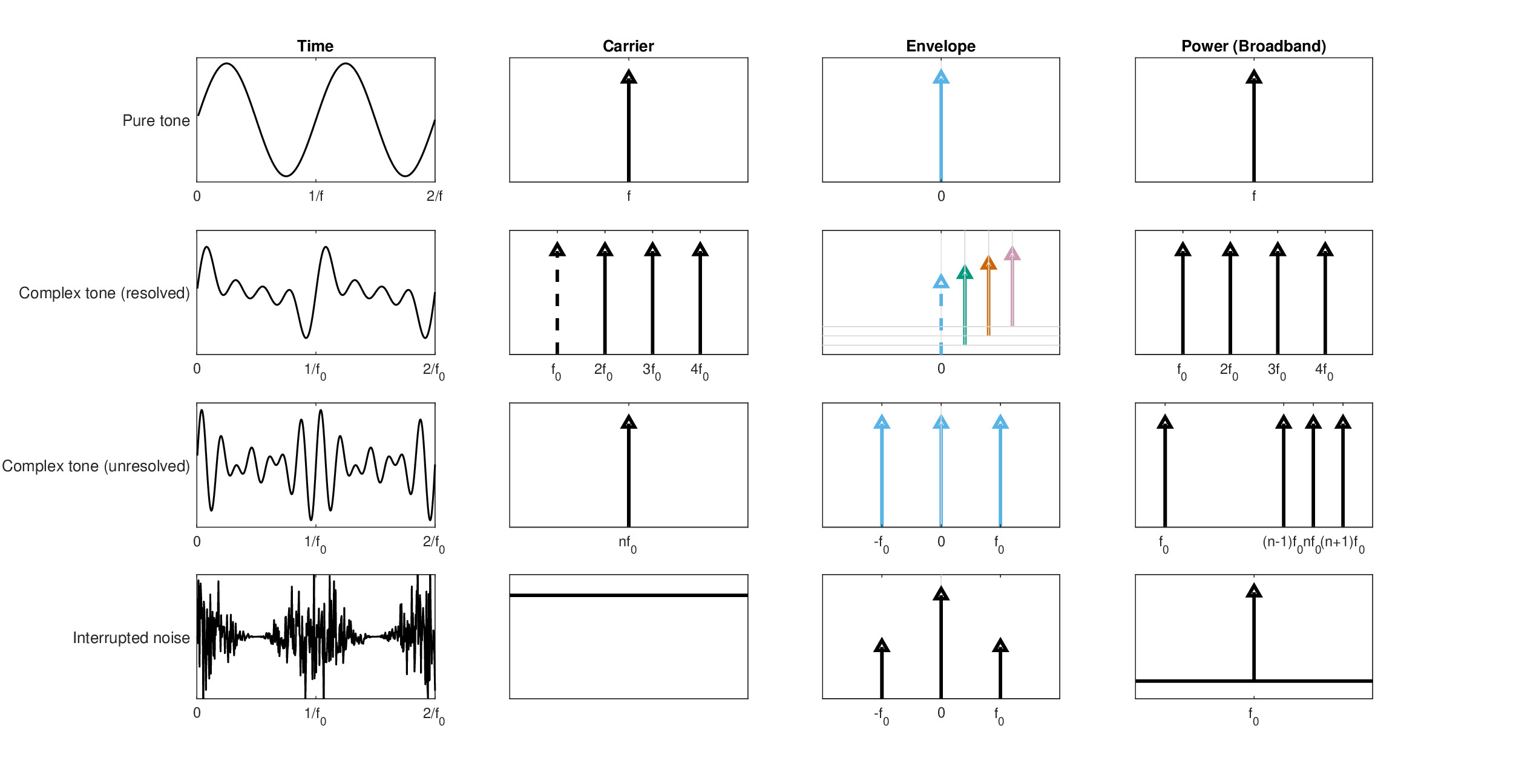

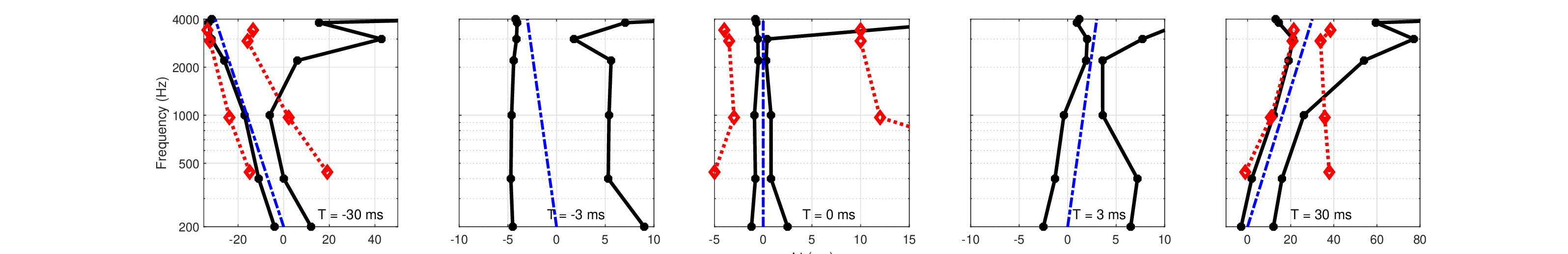

It is worth dwelling briefly on tones and pitch, which have captured the spotlight of auditory research throughout most of its history in one form or another. This section is not concerned directly with how the pitch is determined within the auditory system as a function of the stimulus properties, or in which frequency range each of the pitch types exists. It does, however, attempt to illustrate how the idealized auditory image is related to this standard stimulus family, in order to elucidate interrelated aspects in the pitch and imaging theories. Different pitch types are analyzed using three spectra—of the carrier (through a filter bank), the envelope (or baseband), and the power spectrum that contains the same information as the broadband autocorrelation. The last two spectra require nonlinearity to either demodulate the signal, or generate harmonic distortion that produces the square term that explicitly reveals the fundamental.

It should be noted that while the information required to give rise to monaural pitch is available already at the level of the inferior colliculus (IC), pitch perception is most likely generated in the auditory cortex after appropriate coding transformation (Plack et al., 2014). The early availability appears to hold also more generally for nonstationary pitch, such as the fundamental frequency in speech, which is tracked at the level of the IC (Forte et al., 2017). Inharmonic complex tone pitch and binaural pitch, though, may require information that is derived after some processing that may take place at a higher level than the IC as well (Gockel et al., 2011). Therefore, these general results suggest that an image that appears at the IC can be used to infer some properties of pitch, even if it is produced and perceived further downstream. We shall employ our own auditory image concept loosely here to relate directly to the physical stimulus, but also link it to the psychoacoustic percept, which is assumed to be directly derived from the image, at least for the simple examples used below. Cartoon spectra of four of the most common monaural pitch types are displayed in Figure 15.7 and are described below in some detail.

Figure 15.7: Cartoon spectra of four stimuli that elicit four common types of monaural pitch. Two periods of each time signal appear on the left along with three different spectra on the right. On the second column is the filter-bank spectrum that can identify the carrier. On the third column is the modulation spectrum, which is the baseband spectrum of the filter output after demodulation. This is where the monochromatic object and image reside. Finally, on the rightmost column is the power spectrum, which has to follow some nonlinearity (e.g., half-wave rectification and squaring), although here it excludes additional harmonic distortion products, for clarity. On the top row is a pure tone, which has a single component in all three spectra that makes its image degenerate. On the second row is a complex tone, whose components are individually resolved in one filter each, with its fundamental frequency \(f_0\) intact, or missing (dashed spectral lines). Its envelope spectrum contains only the DC component in each channel, which is associated with a harmonic, and is thus analogous to a polychromatic image. The power spectrum contains \(f_0\), whether it appears in the stimulus or not. On the third row is an unresolved complex tone, whose components are analyzed by a single filter, where it appears like a single component. Its ideal modulation image contains all three components, whereas the power spectrum also contains the missing fundamental (although it is heard more faintly). On the bottom row, a form of periodicity pitch—interrupted noise—is produced by amplitude-modulating white noise, which assumes some pitch if it is fast enough. The power spectrum can reveal the modulation period that is seen in the envelope spectrum, on top of the spectral distribution of the noise itself.

The pure tone is probably the most widely used and abused stimulus in all of acoustics. But what does it entail within the temporal imaging framework? As the pure tone has a real and constant envelope, its ideal image also has a constant envelope with an arbitrary magnification (gain). We hear the constant envelope along with a uniform pitch percept without perceiving the tonal oscillations. Therefore, this is an intensity image, rather than an amplitude image. Such an image is completely static (or “stable” according to Patterson et al., 1992), because it is time-invariant. It is contrasted with arbitrary acoustic objects that have time-dependent amplitude and phase functions that give rise to complex envelopes. Therefore, a pure tone is also coherent in two senses. In the classical sense, a pure tone is obtained from a perfectly (temporally) coherent signal that can always interfere with itself147. In the auditory jargon usage of coherence, as the pure tone has no beginning and no end, it is always coherent in the envelope domain as well, whose spectrum contains only a single line at zero (DC). Therefore, we can think of the pure tone as a degenerate image148. In analogy to vision, such an image would correspond to a monochromatic dot object that is fixed in space right on the optical axis and is projected as a still (spread) point at the center of the fovea.

Complex tones refer to series of pure tones with fixed spacing between their frequency components and common onsets and offsets. The series is usually harmonic, which means that the component frequencies are integer multiples of a common fundamental frequency. It is possible to generate harmonic series so that each tone is resolved in its own dedicated auditory channel, which prevents audible beating between components from taking place. This gives rise to a series of degenerate images. But the auditory system also extracts the periodicity of the harmonic series, which in this case corresponds to the lowest-frequency spectral line in its broadband power spectrum. Thus, while more complex, this image is still static and has a distinct pitch that corresponds to the fundamental frequency, which equals the spacing between the components. Additionally, because of the integer ratios between the harmonics and the frequency spacing, it is also a degenerate image, but in a different sense: the fundamental frequency of the harmonic series coincides with the periodicity from the power spectrum. This gives rise to the famous missing fundamental effect: the perception that the pitch corresponds to the fundamental even when the harmonic series excludes it. There is no complete analog to this image in vision, but the periodicity spectrum is analogous to a grating, whereas each component corresponds to a color. However, we cannot represent their harmonic relations visually\(^{149,150}\).
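The missing fundamental can be illustrated numerically. The following is a minimal sketch and not the model used in this chapter: it assumes a harmonic complex with a 200 Hz fundamental that contains harmonics 2 to 4 only, uses plain squaring as a stand-in for the nonlinearity that precedes the power spectrum, and ignores auditory filtering altogether. The helper `dft_magnitude` and all parameter values are illustrative choices.

```python
import math

def dft_magnitude(x, freq_hz, fs):
    """Return the single-frequency DFT magnitude of x, normalized by len(x)."""
    w = 2.0 * math.pi * freq_hz / fs
    re = sum(v * math.cos(w * k) for k, v in enumerate(x))
    im = sum(v * math.sin(w * k) for k, v in enumerate(x))
    return math.hypot(re, im) / len(x)

fs = 8000                # sampling rate in Hz (assumed)
f0 = 200                 # fundamental frequency in Hz (assumed)
n = 800                  # 0.1 s, an integer number of periods of f0
harmonics = (2, 3, 4)    # the fundamental itself is left out of the stimulus

# Harmonic complex with a missing fundamental.
stimulus = [
    sum(math.cos(2.0 * math.pi * h * f0 * k / fs) for h in harmonics)
    for k in range(n)
]

# Squaring stands in for the nonlinearity (an assumption of this sketch).
squared = [v * v for v in stimulus]

stimulus_at_f0 = dft_magnitude(stimulus, f0, fs)
squared_at_f0 = dft_magnitude(squared, f0, fs)

print("stimulus component at f0:", stimulus_at_f0)
print("squared-signal component at f0:", squared_at_f0)
```

Under these assumptions the stimulus has no component at 200 Hz, whereas the squared signal does, because each pair of adjacent harmonics produces a difference component at their common spacing.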

For limited harmonic series with small frequency spacing and only a few components, the harmonics may not be resolvable, so they are analyzed mainly within a single channel. In general, such series can be mathematically represented as interference or beating patterns that modulate a carrier. This means that the envelope spectrum contains at least two components (i.e., a DC component at zero and a component at the beating frequency), whereas the carrier domain has only one. The broadband power spectrum again shows the fundamental that corresponds to a residual pitch. However, the image in itself (the temporal envelope) is monochromatic. This pitch type usually produces a fainter sensation than other pitch types.
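For the simplest case of two equal-amplitude components, the beating representation can be written explicitly. The symbols \(\omega_1\), \(\omega_2\), \(\bar{\omega}\), and \(\Delta\omega\) are introduced here for illustration only:

\[ \cos(\omega_1 t) + \cos(\omega_2 t) = 2\cos\left(\frac{\Delta\omega}{2}t\right)\cos(\bar{\omega}t), \qquad \bar{\omega} = \frac{\omega_1+\omega_2}{2}, \quad \Delta\omega = \omega_2-\omega_1 \]

The squared envelope is \(4\cos^2(\Delta\omega t/2) = 2 + 2\cos(\Delta\omega t)\), which contains exactly a DC component and a component at the beating frequency \(\Delta\omega/2\pi\), whereas the carrier contains the single frequency \(\bar{\omega}\).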

Interrupted pitch is another interesting type of pitch that is produced strictly by modulating broadband noise that does not contain any tonal information. Thus, it has no distinct components in its carrier spectrum. It has nontrivial components only in its envelope and power spectra.
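A small numerical sketch can show that the periodicity of interrupted noise is absent from the stimulus spectrum but present in its power spectrum. It is only an illustration under stated assumptions: Gaussian white noise, on/off gating at 100 Hz with a 50% duty cycle, squaring as the nonlinearity, and no auditory filtering. The helper `dft_magnitude`, the seed, and all parameter values are hypothetical choices.

```python
import math
import random

def dft_magnitude(x, freq_hz, fs):
    """Return the single-frequency DFT magnitude of x, normalized by len(x)."""
    w = 2.0 * math.pi * freq_hz / fs
    re = sum(v * math.cos(w * k) for k, v in enumerate(x))
    im = sum(v * math.sin(w * k) for k, v in enumerate(x))
    return math.hypot(re, im) / len(x)

random.seed(1)           # fixed seed so that the run is repeatable
fs = 8000                # sampling rate in Hz (assumed)
fm = 100                 # interruption rate in Hz (assumed)
n = 4000                 # 0.5 s, i.e., 50 interruption periods
period = fs // fm        # samples per interruption period

noise = [random.gauss(0.0, 1.0) for _ in range(n)]
# On/off gate with a 50% duty cycle.
gate = [1.0 if (k % period) < period // 2 else 0.0 for k in range(n)]
stimulus = [g * v for g, v in zip(gate, noise)]

# Squaring stands in for the nonlinearity (an assumption of this sketch).
power = [v * v for v in stimulus]

stimulus_at_fm = dft_magnitude(stimulus, fm, fs)   # noise floor only
power_at_fm = dft_magnitude(power, fm, fs)         # interruption rate appears
power_off_fm = dft_magnitude(power, 1.5 * fm, fs)  # not a gate harmonic

print("stimulus at fm:", stimulus_at_fm)
print("squared stimulus at fm:", power_at_fm)
print("squared stimulus at 1.5 fm:", power_off_fm)
```

The stimulus itself shows only a noise floor at the interruption rate, while the squared signal has a clear component there, in line with the statement that the nontrivial components reside in the envelope and power spectra.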

The objects of more realistic sounds are generally not time invariant, so they give rise to nondegenerate images. These images may have variable carriers that are more intuitively expressed using frequency modulation than using a stationary carrier spectrum with multiple components (for example, the two bottom-right plots in Figure 15.2). Realistic objects can also have nonuniform frequency spacing, which eliminates periodicity and makes the within-channel broadband envelope spectrum nonstationary as well. Complex tone objects may also be frequency-shifted—an operation that retains time-invariant carriers and envelopes in resolved channels, but produces aperiodic broadband spectra in unresolved channels (with more than two components).

Each type of pitch, therefore, reveals a different feature of the auditory system. When the corresponding spectrum or feature of the stimulus is degenerate, it elicits a stable sensation that we call pitch. Part of the multiplicity of pitch types may go back to the fact that, in general, there is no unique mathematical representation for broadband signals, so the auditory system may have had to develop ways to “corner” the signal analysis and make it sound unique by comparing information from different spectra.

Three questions can be raised following this high-level characterization of pitch. First, must all images be perceived with pitch? Second, do “pitchiness” and sharpness refer to the same underlying quality in hearing? Third, does the perception of pitch always require periodicity? According to our image definition (the scaled replica of a temporal envelope pulse), the answer to the first question is “no”, since the image appears at a more primitive processing stage than the pitch. As for the second question, the temporal auditory image refers to an arbitrary complex envelope of a single mode of the acoustic object, but the imaging condition says nothing about its duration, which is essential to elicit pitch. This situation is complex, because the perception of pitch entails sharpness. Also, an increase in blur can erode the pitch of a sound, but not eliminate it. Therefore, there is a strong association between pitch and sharpness, but there are examples of pitched sounds that are not sharp (Huggins pitch is a clear one), and of sharp sounds that are not pitched (perhaps like a snappy impulse, such as a snare drum). Thus, while it seems that the answer to this question is a cautious “no”, a more exhaustive answer probably demands further research. As for the third question, linearly frequency-modulated tones (glides) do not have a fixed pitch and they are not periodic. However, they certainly elicit a perception of pitch, albeit a dynamic one (de Cheveigné, 2005; pp. 206–207). Therefore, the answer here is negative as well.

Interestingly, the property of pitchiness, or pitch strength, is inversely proportional to the bandwidth of the signal (Fastl and Zwicker, 2007; pp. 135–148), or maybe to its coherence time that is directly dependent on the bandwidth (Eq. §8.31). So, tones have a very long coherence time, whereas broadband noise has a negligible one, and narrowband noise falls somewhere in between. It also indicates that the auditory system is configured to have complete incoherence only for full-bandwidth inputs, which include several critical bands. The corollary is that a single channel may have residual coherence even with random narrowband noise, by virtue of its limited bandwidth. This is in line with the conclusions from the literature review about apparent phase locking to broadband noise (§9.9.2) and the discussion about temporal modulation transfer functions of partially coherent signals (§13.4.5).
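As a rough order-of-magnitude illustration (not Eq. §8.31 itself, whose exact constant depends on how the bandwidth is defined and on the spectral shape), the reciprocal relation between the coherence time \(\tau_c\) and the bandwidth \(\Delta f\) may be taken as \(\tau_c \sim 1/\Delta f\). The symbols and the bandwidth values below are arbitrary examples:

\[ \Delta f = 1\ \mathrm{Hz} \Rightarrow \tau_c \sim 1\ \mathrm{s}, \qquad \Delta f = 100\ \mathrm{Hz} \Rightarrow \tau_c \sim 10\ \mathrm{ms}, \qquad \Delta f = 10\ \mathrm{kHz} \Rightarrow \tau_c \sim 0.1\ \mathrm{ms} \]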

15.9 Higher-order monochromatic auditory aberrations

15.9.1 General considerations

Basic spatial imaging harnesses the paraxial approximation, which requires light propagation at small angles about the optical axis and perfectly spherical or planar wavefronts. In realistic optical systems, these approximations are increasingly violated the larger the light angle is and when the various optical elements are imperfect. These imperfections are collectively called aberrations. The nonideal image exhibits various aberrations that can be studied as departures from ideal imaging. This can be done through wavefront and ray analysis, by comparing the path difference of different points along the same wavefront as it propagates in space, which should have equal optical length in aberration-free imaging. In general, all imaging systems have a certain degree of primary higher-order aberrations and the eye is no exception, something that was already recognized by Helmholtz (1909, pp. 353–376). In the design of optical systems, aberrations are eliminated or mitigated by balancing them with other aberrations in specific conditions, although this process results in the generation of yet higher-order aberrations (Mahajan, 2011).

In wave optics, the geometrical optical wavefront analysis is elaborated by the inclusion of higher-order phase effects, beyond the quadratic phase terms that characterize the diffraction integral and the lens curvature. Similarly, in deriving expressions for the time lens and group-velocity dispersive medium, the phase functions used in the theory were expanded only up to second order, which implies quadratic curvature (§10) and can account for defocus and chromatic aberrations. The existence of additional phase terms that depend on higher powers of frequency or time would drive the channel response away from its ideal imaging (Bennett and Kolner, 2001). However, because the dimensionality in temporal imaging is lower than in spatial imaging, not all of the known spatial aberrations have relevant temporal analogs.
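The truncation can be made explicit with a generic Taylor expansion of a channel phase function about its center frequency. The notation below (\(\varphi\), \(\omega_c\), and the subscripted derivatives) is assumed for illustration and may differ from the notation used in §10:

\[ \varphi(\omega) \approx \varphi_0 + \varphi_1(\omega-\omega_c) + \frac{\varphi_2}{2!}(\omega-\omega_c)^2 + \frac{\varphi_3}{3!}(\omega-\omega_c)^3 + \frac{\varphi_4}{4!}(\omega-\omega_c)^4 + \cdots, \qquad \varphi_n = \left.\frac{d^n\varphi}{d\omega^n}\right|_{\omega=\omega_c} \]

The constant and linear terms contribute only a phase offset and a group delay, the quadratic term is the one retained in the theory, and the cubic and higher terms are the ones that were neglected.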

Because the auditory channels are relatively narrow and the aperture stop is very short, higher-order aberrations may be difficult to observe, or they may appear completely absent, which reflects the paratonal approximation that is analogous to the paraxial approximation. It is not impossible that the normally functioning hearing system circumvents higher-order aberrations by having a dense spectral coverage with dedicated fine-tuned filters along the cochlea, each of which has diminishingly low aberration around its center frequency. In other words, the various auditory filters are optimal around their characteristic frequency but are overtaken by other filters away from it, off-frequency. An additional mitigating factor is that higher-order aberrations are more severe for magnification values that differ greatly from unity (\(|M| \gg 1\) or \(|M| \ll 1\); Bennett and Kolner, 2001), whereas our system is much closer to \(M\approx1\). Finally, the defocus itself, which is a second-order aberration, may mask the smaller effects of the higher-order (third and above) aberrations. For example, spherical aberration is symmetrical around the channel center frequency and generally results in increased blur away from the (spatial or temporal) image center, which may be masked in hearing.

To the extent that higher-order aberrations are a real concern, they may also be difficult to identify using our pupil function and, hence, the point spread function analysis (Mahajan, 2011; pp. 77–137), which we estimated only up to second order. While it was assumed for convenience that the auditory aperture is Gaussian, the single measurement that determined its shape directly had an asymmetrical tail attached to the Gaussian from the right (the forward-time direction; §12.5). This tail can be expected to cause the point spread function of the system to have asymmetrical (odd) higher-order phase terms.

The implications of having higher-order phase terms can be made more concrete by closely examining the dispersive elements of the system. If the phase curvature in the filter skirts is not exactly quadratic, then various dispersive aberrations analogous to those of spatial optics may exist: coma (third-order phase term in the Taylor expansion, Eq. §10.27) and spherical aberration (fourth-order term) (Bennett and Kolner, 2001). These terms may be detrimental to perceived sound quality when the excited channel is either over-modulated (reaching high instantaneous frequencies that should be normally resolved into multiple filters), or is more simply excited off-frequency, away from its center frequency\(^{151}\). These situations entail that the auditory channel works beyond its paratonal approximation, well beyond its center frequency, whose role is analogous to the spatial optical axis\(^{152}\).
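The qualitative difference between a quadratic and a cubic phase term can be sketched numerically. The example below is an illustration under stated assumptions rather than a model of an auditory channel: it takes a Gaussian envelope spectrum in arbitrary normalized units, applies either a purely quadratic or a purely cubic spectral phase, synthesizes the envelope by direct summation, and measures the skewness of the resulting intensity over time. The function names and the coefficients `phi2` and `phi3` are hypothetical.

```python
import cmath
import math

def envelope_intensity(phase_fn, times, freqs, sigma_f=1.0):
    """Intensity |a(t)|^2 of a Gaussian-spectrum envelope with spectral phase phase_fn(omega)."""
    spectrum = [
        math.exp(-0.5 * (f / sigma_f) ** 2) * cmath.exp(1j * phase_fn(2.0 * math.pi * f))
        for f in freqs
    ]
    intensity = []
    for t in times:
        # Direct inverse Fourier sum over the symmetric frequency grid.
        a = sum(s * cmath.exp(2j * math.pi * f * t) for s, f in zip(spectrum, freqs))
        intensity.append(abs(a) ** 2)
    return intensity

def skewness(times, intensity):
    """Normalized third central moment of the intensity distribution over time."""
    total = sum(intensity)
    mean = sum(t * i for t, i in zip(times, intensity)) / total
    var = sum((t - mean) ** 2 * i for t, i in zip(times, intensity)) / total
    third = sum((t - mean) ** 3 * i for t, i in zip(times, intensity)) / total
    return third / var ** 1.5

# Symmetric grids in arbitrary normalized units (assumed).
freqs = [0.1 * k for k in range(-60, 61)]
times = [0.04 * k for k in range(-100, 101)]

phi2 = 0.05   # quadratic phase coefficient (arbitrary)
phi3 = 0.01   # cubic phase coefficient (arbitrary)

quadratic = envelope_intensity(lambda w: 0.5 * phi2 * w ** 2, times, freqs)
cubic = envelope_intensity(lambda w: phi3 * w ** 3 / 6.0, times, freqs)

skew_quadratic = skewness(times, quadratic)
skew_cubic = skewness(times, cubic)

print("skewness with quadratic phase:", skew_quadratic)
print("skewness with cubic phase:", skew_cubic)
```

In this sketch the quadratic phase broadens the pulse symmetrically, so its skewness is essentially zero, whereas the cubic phase smears the pulse to one side, which is the temporal counterpart of the one-sided smear associated with coma. The sign of the skewness depends on the sign convention of the Fourier transform and of the coefficient.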

There is little physiological evidence that directly indicates that the phase curvature of the cochlear filters is asymmetrical away from the characteristic frequency, or even not perfectly quadratic. In contrast, a few psychoacoustical studies may be interpreted as showing such an asymmetry. We briefly mention evidence for the former and then focus on evidence for the latter and offer another example of our own to demonstrate this effect.

15.9.2 Physiological evidence

There is mixed physiological evidence for an ideal quadratic phase response of the auditory system. In physiological recordings of frequency glides in auditory nerve fibers of the cat, the instantaneous frequency chirps were best fitted by linear functions that indicated a quadratic phase term only (Carney et al., 1999). In several instances the glides were not linear, but better fits could not be obtained using higher-order regression, including fits made with log frequency. Linearity in the instantaneous frequency slopes was generally observed in the barn owl as well (Wagner et al., 2009). In contrast, in impulse responses measured using different methods in the guinea pig, chinchilla, and barn owl, the slopes of the instantaneous frequency or the phase were usually linear only in part of the response, or they changed only well below the characteristic frequency (de Boer and Nuttall, 1997; Recio et al., 1997; van der Heijden and Joris, 2003; Fontaine et al., 2015). This may be indicative of some asymmetry in the phase response of these auditory channels.
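The link between a linear glide and a purely quadratic phase follows from the definition of instantaneous frequency. With an assumed temporal phase \(\phi(t) = 2\pi\left(f_{\mathrm{start}}t + \tfrac{1}{2}kt^2\right)\), where the starting frequency \(f_{\mathrm{start}}\) and the glide slope \(k\) are illustrative symbols,

\[ f_{\mathrm{inst}}(t) = \frac{1}{2\pi}\frac{d\phi}{dt} = f_{\mathrm{start}} + kt \]

so any curvature in a measured glide would require cubic or higher-order phase terms.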

It should be noted that these measurements consider the auditory system to be dispersive only within the cochlea. This necessarily includes one segment of the neural dispersion (i.e., the path between the inner hair cells and the auditory nerve), which may have a complex phase function in itself. Additionally, these measurements tend to treat the phase response (and the filtering in general) as time-invariant, which is questionable if the outer hair cells produce active phase modulation. Therefore, we shall look for more qualitative psychoacoustic evidence of higher-order aberrations, which includes the complete dispersive path.

15.9.3 Psychoacoustic evidence

If the normal auditory system has a temporal coma aberration, then it might be possible to observe its effect when a filter is excited asymmetrically (off-frequency) with an adequate modulation spectrum. With coma, point images are smeared asymmetrically to one side, which is also the reason for the name: coma refers to the distinct comet-like smear of the affected image points in two dimensions. If a coma-free dispersive channel is completely uniform over its entire bandwidth, then, to a first approximation, its demodulated temporal image is invariant to how the envelope object is oriented about its center. Distortion is another type of aberration that is sensitive to the orientation about the center frequency, although it has not been considered in the temporal imaging literature. In spatial imaging it appears as uneven magnification of the image, so that its circumference is deformed in relation to its center, endowing the image with the familiar barrel or pin-cushion deformations. Unlike transverse chromatic aberration, which also exhibits nonuniform magnification in the context of polychromatic imaging, distortion does not cause any blur within the monochromatic image. Just like the auditory spherical aberration, distortion aberration is presently impossible to estimate. However, the combined effect of these hypothetical temporal aberrations may be tested using off-frequency stimuli. Three examples from the literature that support this idea are described below.